ScienceRocks

- #341

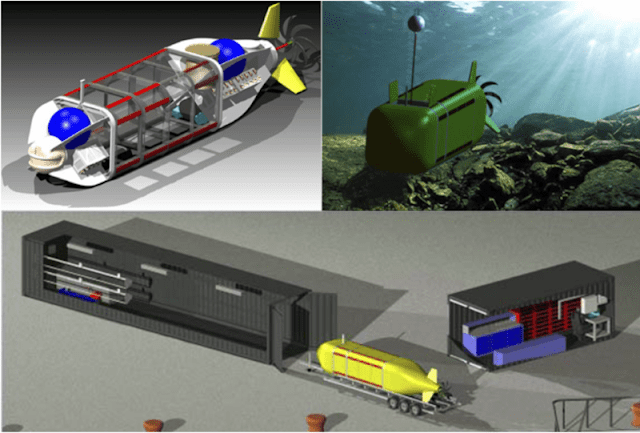

Boeing patents a drone that turns into a submarine

By Eric Mack - August 18, 2015 1 Picture

The Navy will be building prototype large robotic submarines in 2015-2016 and testing them in 2018. According to the Navy's ISR Capabilities Division, LDUUV will reach initial operating capability as a squadron by 2020 and full rate production by 2025. The US Navy has released requirements for its long duration large robotic submarine. (LDUUV -...

Arnold Schwarzenegger’s Terminator was science fiction — but so, too, is the idea that robots and software algorithms are guzzling jobs faster than they can be created. There is an astonishing mismatch between our fear of automation and the reality so far.

How can this be? The highways of Silicon Valley are sprinkled with self-driving cars. Visit the cinema, the supermarket or the bank and the most prominent staff you will see are the security guards, who are presumably there to prevent you stealing valuable machines. Your computer once contented itself with correcting your spelling; now it will translate your prose into Mandarin. Given all this, surely the robots must have stolen a job or two by now?

Of course, the answer is that automation has been destroying particular jobs in particular industries for a long time, which is why most westerners who weave clothes or cultivate and harvest crops by hand do so for fun. In the past that process made us richer.

The worry now is that, with computers making jobs redundant faster than we can generate new ones, the result is widespread unemployment, leaving a privileged class of robot-owning rentiers and highly paid workers with robot-compatible skills.

This idea is superficially plausible: we are surrounded by cheap, powerful computers; many people have lost their jobs in the past decade; and inequality has risen in the past 30 years.

But the theory can be put to a very simple test: how fast is productivity growing? The usual measure of productivity is output per hour worked — by a human. Robots can produce economic output without any hours of human labour at all, so a sudden onslaught of robot workers should cause a sudden acceleration in productivity.

Instead, productivity has been disappointing. In the US, labour productivity growth averaged an impressive 2.8 per cent per year from 1948 to 1973. The result was mass affluence rather than mass joblessness. Productivity then slumped for a generation and perked up in the late 1990s but has now sagged again. The picture is little better in the UK, where labour productivity is notoriously low compared with the other G7 leading economies, and it has been falling further behind since 2007.
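To put rough numbers on the productivity test in the article, here is a minimal Python sketch. The function names and the factory figures are made up for illustration; only the 2.8 per cent rate and the 1948 to 1973 span come from the article.

```python
def labour_productivity(output, hours_worked):
    """Output per hour of human labour, the usual productivity measure."""
    return output / hours_worked


def cumulative_growth(annual_rate, years):
    """Factor by which productivity multiplies after compounding for `years`."""
    return (1 + annual_rate) ** years


# Hypothetical factory: robots add output without adding any human hours,
# so measured labour productivity should jump.
before = labour_productivity(output=1000.0, hours_worked=500.0)
after = labour_productivity(output=1500.0, hours_worked=500.0)

# 2.8% a year sustained over the 25 years from 1948 to 1973.
postwar_factor = cumulative_growth(0.028, 1973 - 1948)

print(f"productivity before robots: {before:.1f} units/hour")
print(f"productivity after robots:  {after:.1f} units/hour")
print(f"1948-1973 cumulative factor: {postwar_factor:.2f}")
```

Compounded, 2.8 per cent a year comes to roughly a doubling of output per hour over those 25 years, which is the kind of acceleration a wave of robot workers would be expected to show up as.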

Scientists trying to build a better robot are encouraged by the steps, however tentative, of a humanoid named Atlas.

In a video shown recently by Atlas’s makers, it is hard to miss the human in the humanoid as the 6-foot-2 machine takes a casual, careful stroll through the woods. It walks like a crouched limbo contestant (who perhaps imbibed one too many piña coladas), shuffles through a wooded area, tethered by a power cord, and then breaks into a more confident, foot-slapping walk when it reaches flat ground — much as a person would. Scientists hope to make an untethered version soon.

If you imagine lettuce as a crop planted in a field and tended by farmers, you're behind the times.

Kyoto-based vegetable factory operator Spread Co. said it will begin construction of what it calls a fully automated large-scale lettuce factory in spring 2016, which will be able to produce 30,000 heads of lettuce per day with "a push of a button."

The company isn't quite finished with the factory's development. According to Spread, there are six stages to growing a lettuce at a factory: seeding, germination, raising the seedlings, transplanting them into a larger bed, raising the vegetable and harvesting. The company said it is still working on a machine that can handle the seeding process. And it still requires human eyes to confirm germination. Beyond that, every process is automated, it says.

Stacker cranes are to carry the lettuce seedlings and hand them over to robots which will take care of transplanting them. Once fully grown, they will be harvested and delivered automatically to the factory's packaging line. The automated process will not only handle the lettuce but also control the temperature, humidity, level of carbon dioxide, sterilization of water and lighting hours, the company said.

Spread has seven years of experience in growing lettuce in its factories, with the produce being sold in 2,000 stores in Japan. While the lettuce is sold for about the same price as that grown in regular farms today, the company hopes to bring down costs. It says the factory lettuce tastes the same as lettuce grown outdoors.

Shipments from the fully automated lettuce factory are expected to begin in summer 2017.

Construction workers on some sites are getting new, non-union help. SAM – short for semi-automated mason – is a robotic bricklayer being used to increase productivity as it works with human masons.

In this human-robot team, the robot is responsible for the more rote tasks: picking up bricks, applying mortar, and placing them in their designated location. A human handles the more nuanced activities, like setting up the worksite, laying bricks in tricky areas, such as corners, and handling aesthetic details, like cleaning up excess mortar.

Even in completing repetitive tasks, SAM still has to be fairly adaptable. It’s able to complete precise and level work while mounted on a scaffold that sways slightly in the wind. The robot can correct for the differences between theoretical building specifications and what’s actually on site, says Scott Peters, cofounder of Construction Robotics, a company based in Victor, New York, that designed SAM as its debut product.

Having trained Giraffe, the final step is to test it and here the results make for interesting reading. Lai tested his machine on a standard database called the Strategic Test Suite, which consists of 1,500 positions that are chosen to test an engine’s ability to recognize different strategic ideas. “For example, one theme tests the understanding of control of open files, another tests the understanding of how bishop and knight’s values change relative to each other in different situations, and yet another tests the understanding of center control,” he says.

The results of this test are scored out of 15,000.

Lai uses this to test the machine at various stages during its training. As the bootstrapping process begins, Giraffe quickly reaches a score of 6,000 and eventually peaks at 9,700 after only 72 hours. Lai says that matches the best chess engines in the world.
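For a sense of scale on those scores, here is a small Python sketch. The article gives 1,500 positions and a maximum of 15,000; treating every position as worth the same 10 points is an assumption made here, and the function names are hypothetical, not part of Giraffe or the test suite.

```python
POSITIONS = 1500    # from the article
MAX_TOTAL = 15000   # from the article
# Assumption: every position carries the same maximum number of points.
POINTS_PER_POSITION = MAX_TOTAL // POSITIONS


def suite_score(points_awarded):
    """Sum per-position points, rejecting values outside the allowed range."""
    for points in points_awarded:
        if not 0 <= points <= POINTS_PER_POSITION:
            raise ValueError(f"points out of range: {points}")
    return sum(points_awarded)


def percent_of_max(score):
    """Express a suite score as a percentage of the maximum."""
    return 100.0 * score / MAX_TOTAL


print(f"early in training: {percent_of_max(6000):.1f}% of maximum")
print(f"after 72 hours:    {percent_of_max(9700):.1f}% of maximum")
```

Read this way, the reported peak of 9,700 is a bit under two thirds of the maximum, which according to Lai is where the best engines land as well.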

“[That] is remarkable because their evaluation functions are all carefully hand-designed behemoths with hundreds of parameters that have been tuned both manually and automatically over several years, and many of them have been worked on by human grandmasters,” he adds.

....

“Unlike most chess engines in existence today, Giraffe derives its playing strength not from being able to see very far ahead, but from being able to evaluate tricky positions accurately, and understanding complicated positional concepts that are intuitive to humans, but have been elusive to chess engines for a long time,” says Lai. “This is especially important in the opening and end game phases, where it plays exceptionally well.”

And this is only the start. Lai says it should be straightforward to apply the same approach to other games. One that stands out is the traditional Chinese game of Go, where humans still hold an impressive advantage over their silicon competitors. Perhaps Lai could have a crack at that next.

Every language has its own collection of phonemes, or the basic phonetic units from which spoken words are composed. Depending on how you count, English has somewhere between 35 and 45. Knowing a language's phonemes can make it much easier for automated systems to learn to interpret speech.

In the 2015 volume of Transactions of the Association for Computational Linguistics, MIT researchers describe a new machine-learning system that, like several systems before it, can learn to distinguish spoken words. But unlike its predecessors, it can also learn to distinguish lower-level phonetic units, such as syllables and phonemes.

As such, it could aid in the development of speech-processing systems for languages that are not widely spoken and don't have the benefit of decades of linguistic research on their phonetic systems. It could also help make speech-processing systems more portable, since information about lower-level phonetic units could help iron out distinctions between different speakers' pronunciations.

Unlike the machine-learning systems that led to, say, the speech recognition algorithms on today's smartphones, the MIT researchers' system is unsupervised, which means it acts directly on raw speech files: It doesn't depend on the laborious hand-annotation of its training data by human experts. So it could prove much easier to extend to new sets of training data and new languages.

Finally, the system could offer some insights into human speech acquisition. "When children learn a language, they don't learn how to write first," says Chia-ying Lee, who completed her PhD in computer science and engineering at MIT last year and is first author on the paper. "They just learn the language directly from speech. By looking at patterns, they can figure out the structures of language. That's pretty much what our paper tries to do."

Lee is joined on the paper by her former thesis advisor, Jim Glass, a senior research scientist at the Computer Science and Artificial Intelligence Laboratory and head of the Spoken Language Systems Group, and Timothy O'Donnell, a postdoc in the MIT Department of Brain and Cognitive Sciences.

Fujitsu R&D Center Co., Ltd. and Fujitsu Laboratories Ltd. (collectively Fujitsu) today announced the development of the world's first handwriting recognition technology to surpass the human-equivalent recognition rate established at a conference, achieving 96.7% by using AI technology modeled on human brain processes.

Fujitsu had previously achieved top-level accuracy in this field, as demonstrated by taking first place, with a recognition rate of 94.8%, at a handwritten Chinese character recognition contest held at the International Conference on Document Analysis and Recognition (ICDAR), a top-level conference in the document image processing field. However, in order to further increase recognition accuracy, a new mechanism for studying the diversity of character deformations was required.

Now, with a focus on a hierarchical model of expanded connections between neurons, a model based on the human brain which grasps the features of the characters, Fujitsu has developed a technology to automatically create numerous patterns of character deformation from the character's base pattern, thereby "training" this hierarchical neural model. Using this method, Fujitsu has achieved an accuracy rate of 96.7%, surpassing the human equivalent recognition rate of 96.1% for handwritten Chinese characters.
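The general idea of generating many deformed variants from one base pattern can be sketched in a few lines of Python. This is not Fujitsu's method, which the announcement does not detail; it is a generic illustration that slants a tiny bitmap by random amounts, and every name and parameter in it is hypothetical.

```python
import random


def shift_row(row, offset):
    """Shift pixels right (positive) or left (negative), padding with 0."""
    width = len(row)
    shifted = [0] * width
    for x, pixel in enumerate(row):
        new_x = x + offset
        if 0 <= new_x < width:
            shifted[new_x] = pixel
    return shifted


def shear(bitmap, slope):
    """Slant a glyph: rows move in proportion to their distance from the middle row."""
    middle = (len(bitmap) - 1) / 2
    return [shift_row(row, round(slope * (middle - y)))
            for y, row in enumerate(bitmap)]


def make_variants(bitmap, count, max_slope=0.5, seed=0):
    """Produce `count` randomly slanted copies of one base pattern."""
    rng = random.Random(seed)
    return [shear(bitmap, rng.uniform(-max_slope, max_slope))
            for _ in range(count)]


# Base pattern: a vertical stroke on a 5x5 grid (illustrative only).
BASE = [[0, 0, 1, 0, 0] for _ in range(5)]

for variant in make_variants(BASE, count=3):
    for row in variant:
        print("".join("#" if pixel else "." for pixel in row))
    print()
```

Each variant keeps the stroke recognisable while changing its shape slightly, so a model trained on the whole set sees more of the variation that real handwriting would show.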

Fujitsu expects that this technology will enable further automation of computer input and recognition.

Ordinarily, while humans can easily recognize media such as characters, images and sounds, for computers this recognition is much more difficult, due to both the many variations in shape, brightness and so on of the object to be recognized, as well as the existence of similar objects. This has become a central problem in artificial intelligence research.

Fujitsu has decades of experience in character recognition, with commercialized technologies used in such areas as Japan's finance and insurance fields for Japanese language, as well as a Chinese character recognition technology used by the Chinese government for 800 million handwritten census forms. Fujitsu started research using artificial intelligence based on deep learning for character recognition in 2010. In 2013, the character recognition technology developed on the basis of this artificial intelligence took first place (recognition rate of 94.8%) at a handwritten Chinese character recognition contest held at a top-level international conference in the document image processing field, achieving the highest accuracy in the field.

Do you really think it will only be low skilled jobs? Smart machines will be able to do accounting better than a person! A lawyer can only process and remember so many regulations and so much case law, and a computer can disseminate the entire NY penal code in seconds. That's what I've been trying to tell folks.. Low skilled sweat labor is pretty much dead. Those robot hamburglars are gonna have a ball flirting with the customers during the break.

But there'll always be jobs TEACHING robots to assemble customized or new menu items.

We have a MONSTROUS societal shift about to take place... As large and disruptive as the industrial revolution.. And all we want to talk about is how evil rich folks are responsible for taking all the jobs away.. We're being badly misled..

Will we need pilots, soldiers, police etc? They will probably get replaced.

Teachers? Stock Brokers?

The list will go on and on. There will always be jobs, but fewer of them, and an ever-growing population.

Roaming around a driving range retrieving the endless scattering of golf balls is a pretty tall order for staff, especially when you consider the bays full of weekend hackers taking aim at their caged buggies. But one company is looking to give golfers a smaller moving target to aim at. The Ball Picker robot autonomously scoots around sucking up golf balls and returns them to a ball dispenser to be teed up once again.