OK... so why CO2 trails temperature?

- Thread starter catatomic

- Start date

- Status

- Not open for further replies.

- Nov 28, 2012

I found this quote:

"As the Southern Ocean warms, the solubility of CO2 in water falls (Martin 2005). This causes the oceans to give up more CO2, emitting it into the atmosphere. The exact mechanism of how the deep ocean gives up its CO2 is not fully understood but believed to be related to vertical ocean mixing (Toggweiler 1999). The process takes around 800 to 1000 years, so CO2 levels are observed to rise around 1000 years after the initial warming (Monnin 2001, Mudelsee 2001).

The outgassing of CO2 from the ocean has several effects. The increased CO2 in the atmosphere amplifies the original warming. The relatively weak forcing from Milankovitch cycles is insufficient to cause the dramatic temperature change taking our climate out of an ice age (this period is called a deglaciation). However, the amplifying effect of CO2 is consistent with the observed warming. "
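The temperature dependence of CO2 solubility described in the quote can be sketched with Henry's law plus a van 't Hoff temperature correction. The constants below (roughly 0.034 mol/(L·atm) at 298.15 K and a temperature coefficient of roughly 2400 K) are approximate literature values for CO2 in pure water, not seawater, and the function name is made up for illustration. Treat it as a rough sketch of the trend, not an ocean carbon model.

```python
import math

# Approximate values for CO2 in pure water (assumptions, not from the thread).
KH_298 = 0.034        # mol/(L*atm), Henry's law constant at 298.15 K
VANT_HOFF_K = 2400.0  # K, temperature coefficient d(ln kH)/d(1/T)
T_REF = 298.15        # K, reference temperature


def co2_henry_constant(temp_k):
    """Henry's law solubility constant of CO2 at temp_k (van 't Hoff form)."""
    return KH_298 * math.exp(VANT_HOFF_K * (1.0 / temp_k - 1.0 / T_REF))


# Solubility falls as the water warms, so warmer water holds less CO2
# at the same partial pressure.
for temp_c in (0, 5, 10, 15, 20, 25):
    kh = co2_henry_constant(temp_c + 273.15)
    print(f"{temp_c:>3} C  kH ~ {kh:.3f} mol/(L*atm)")
```

Under these assumed constants, water near freezing dissolves roughly twice as much CO2 as water at 25 C, which is the direction of the effect the quote relies on.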

SSDD

Gold Member

- Nov 6, 2012

- 16,672

- 1,966

- 280

Can you show me any actual measured data which establishes a coherent relationship between the absorption of infrared radiation by a gas, and warming in the atmosphere?

Go to a couple of sites where pushing a climate agenda is not the mission and look at some actual science...go to, for example, a few technical sites where they are talking about how infrared heaters work...literally millions of hours of observation, measurement, and industrial application have shown that infrared radiation can not, and does not warm the air...and if IR does not warm the air, what does that do to the radiative greenhouse hypothesis being pushed by climate science?

Infrared radiation warms objects...those objects can then warm the air via conduction...but infrared radiation?...no...it does not warm the air. There is no data that establishes a coherent relationship between the absorption of IR by a gas and warming in the atmosphere because there is no coherent relationship between the absorption of IR by a gas and warming in the atmosphere.

Billy_Bob

Diamond Member

The outgassing of CO2 from the ocean has several effects. The increased CO2 in the atmosphere amplifies the original warming. The relatively weak forcing from Milankovitch cycles is insufficient to cause the dramatic temperature change taking our climate out of an ice age (this period is called a deglaciation). However, the amplifying effect of CO2 is consistent with the observed warming.

1. Earth's tilt on its axis (eccentricity and solar angle of incidence) is what causes ice ages. Solar energy entering Earth's atmosphere at a greater angle loses more energy before hitting the surface. This is what causes Earth's oceans to warm and cool. Milankovitch cycles can fully cause glaciation regardless of the sun's output or the level of CO2 in our atmosphere.

2. The solubility of CO2 in H2O does indeed decrease as temperature rises. The effect of warming is not fully known, nor is the process of water churn in the oceans.

3. Their "analysis" that CO2 must be it is so far off it isn't funny. It's so wrong that I can't describe just how wrong it is. It is based on pure conjecture and modeling that fails without exception.

LWIR cannot penetrate ocean water beyond its skin layer, where it is immediately shed. In fact, the water just below the skin is cooler than the water below it due to evaporation and the cooling it causes.

The CAGW theory has so many holes in it that the boat will sink, and it has every time the model is placed in water...

Billy_Bob

Diamond Member

"Thank you for your help. I have been waffling as of late."

The next time you waffle, all you need to do is ask yourself how the earth entered and left a glacial cycle with CO2 levels at or above 7,000 ppm.

And when we get to a resolution where you can see them.....

This pattern is right in line with Milankovitch cycles and lays waste to any credible CO2 fantasy.

Exactly! That's the whole point of using infrared heaters in large buildings: you don't want to waste most of the power heating the air.

SSDD

Gold Member

Ever stood on a ski slope on a sunny day...air temperatures close to or below freezing..surrounded by snow and ice and still comfortable in your shirt sleeves? Radiation warming your body but not the air...the whole belief that CO2 can somehow warm the atmosphere is pure magical thinking...millions of hours of observation, measurement, industrial and residential application prove that IR doesn't warm the air and yet they believe...not based on scientific evidence, but on political ideology.

Hahahaha. The shallowness of your thinking never ceases to amaze me!

You take an out-of-context, or unrepresentative example, and generalize it across the board.

Sure, someone might be comfortable at 0C on a calm and sunny day receiving direct and indirect sunshine. The same person would be pretty unhappy at 10C on a cloudy, windy day.

Central heating became popular because of economy of scale and ease of use. Forced air is quick but inefficient because hot air leaks out. Hot water radiant is slower but more efficient. Both use a convection system to move heat from a central source to a distant location.

There has lately been a movement away from Central heating because wall or ceiling mounted electrical radiant panels have become more efficient and effective than old style electric baseboards at floor level on outside walls. Presumably the gap between the price of fossil fuels and electricity is now low enough to make it economically viable. At least until 'free' renewable energy jacks up the price up here as it already has in other parts of the world.

Yes, radiation is a poor and inefficient way of warming the air. It is also a very poor and inefficient way of cooling the air. The small amount of energy absorbed from the surface is still larger than the amount radiated to space from the cooler heights up in the atmosphere.

The Earth only cools by radiation loss to space. Conduction and convection are mediated by mass. There is no mass in space therefore no heat loss by conduction or convection. It does not matter that conduction and convection are efficient at moving energy from one area of mass to another if it can't escape.

Most of the radiation lost by the Earth system is at wavelengths that pass through the atmosphere as if it wasn't there; it is transmitted rather than absorbed or reflected. That happens from the surface, and secondarily at the cloud tops, where condensation releases latent heat via liquid or solid water precipitation. The clouds radiate in all directions, so only roughly half escapes to space.

Anytime energy deviates from directly escaping from the surface to space there is less energy loss. The difference is stored in the atmosphere. That stored energy increases the temperature of the air, which then increases the temperature of the surface, which then increases the amount of directly escaping radiation until it matches the solar energy input.

There is no way around it. Still don't believe it? Fine, then explain why there is missing radiation from the top of the atmosphere in exactly the same wavelengths that GHGs are known to absorb. Don't believe that the energy is being stored in the atmosphere and returned to the surface? Fine, then explain how the surface is radiating at a higher output than the solar input.
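The comparison between surface output and solar input in the post above can be checked with the Stefan-Boltzmann law. This is only a back-of-envelope sketch: the solar constant (1361 W/m^2), planetary albedo (0.30) and mean surface temperature (288 K) are commonly used round values assumed here, not figures from the thread, and the surface is treated as a perfect blackbody.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def absorbed_solar(solar_constant=1361.0, albedo=0.30):
    """Globally averaged absorbed sunlight in W/m^2 (assumed round inputs)."""
    return solar_constant * (1.0 - albedo) / 4.0


def blackbody_flux(temp_k):
    """Flux in W/m^2 emitted by a blackbody at temp_k."""
    return SIGMA * temp_k ** 4


def effective_temperature(flux):
    """Temperature in K of a blackbody emitting the given flux."""
    return (flux / SIGMA) ** 0.25


absorbed = absorbed_solar()
print(f"absorbed solar:          {absorbed:.1f} W/m^2")
print(f"effective temperature:   {effective_temperature(absorbed):.1f} K")
print(f"surface output at 288 K: {blackbody_flux(288.0):.1f} W/m^2")
```

With these assumed inputs the surface emits considerably more than the planet absorbs from the sun, which is the gap the post asks readers to explain.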

I personally don't think CO2 is the control knob of the climate system but I certainly think it is one of the factors. Data proves it, science explains the mechanism. I disagree with the consensus climate science claims for the feedbacks because the data disagrees and the science doesn't come close to being able to explain the mechanics of the water cycle and clouds.

SSDD

Gold Member

Radiation is a poor way to warm the air because it doesn't warm air...It is inefficient because if you want to use radiation to warm the air, you have to heat the objects in a room and wait for the energy absorbed by the objects in the room and the walls to conduct that energy to the air.

Your belief that there is a radiative greenhouse effect is belief in magic, Ian...there is no radiative greenhouse effect as described by climate science.

And what data proves it, Ian? Model data? Magical model data? There isn't the first piece of observed, measured evidence which establishes a coherent relationship between the absorption of IR by a gas and warming in the atmosphere...and there isn't the first piece of real data that suggests that CO2 has any effect on climate whatsoever. But if you believe there is, by all means let's see it...my bet is that if you post something it will be little more than additional evidence of how easily you are fooled by instrumentation.

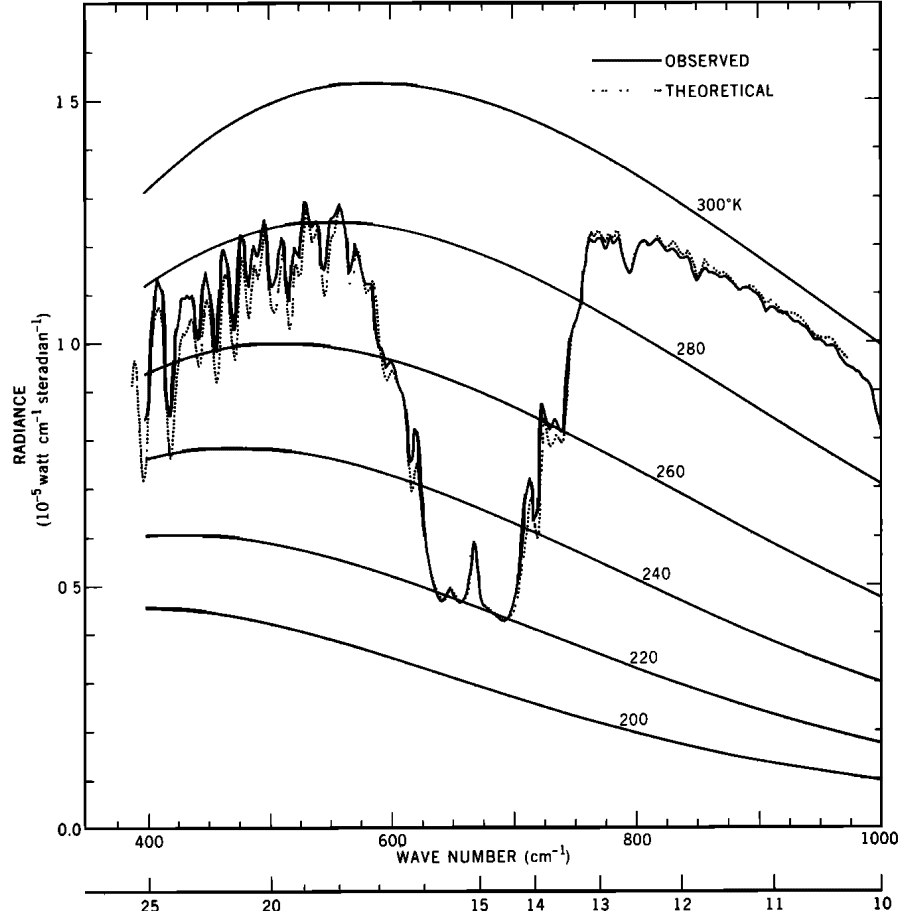

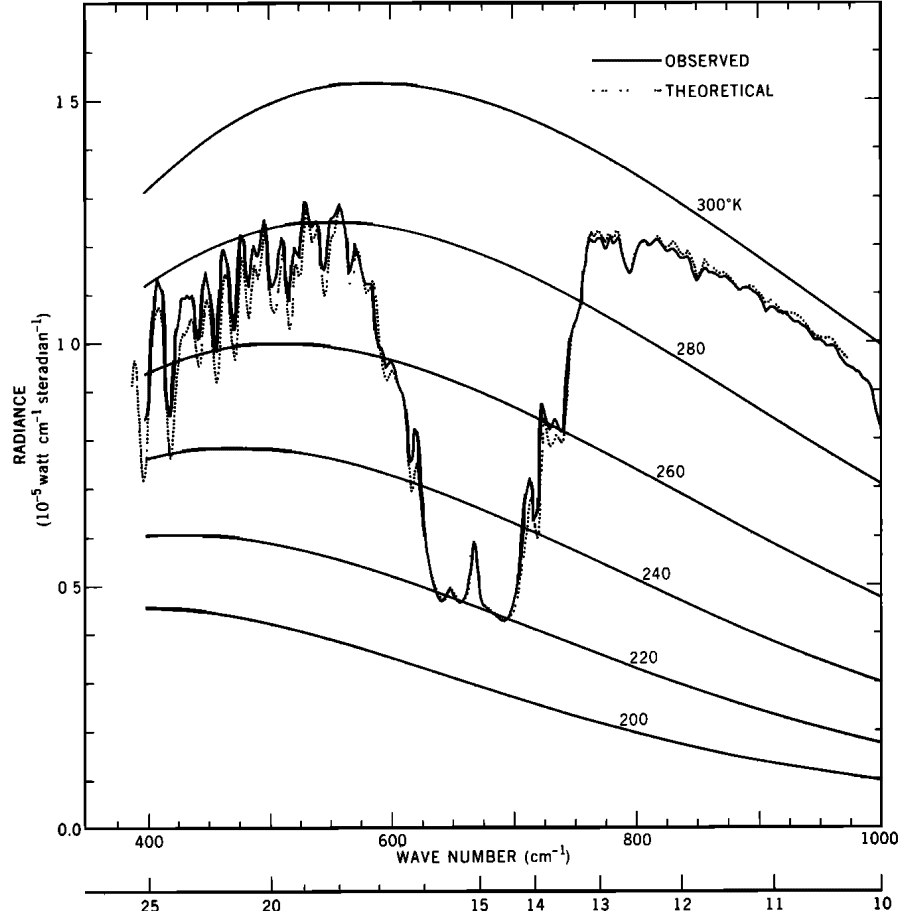

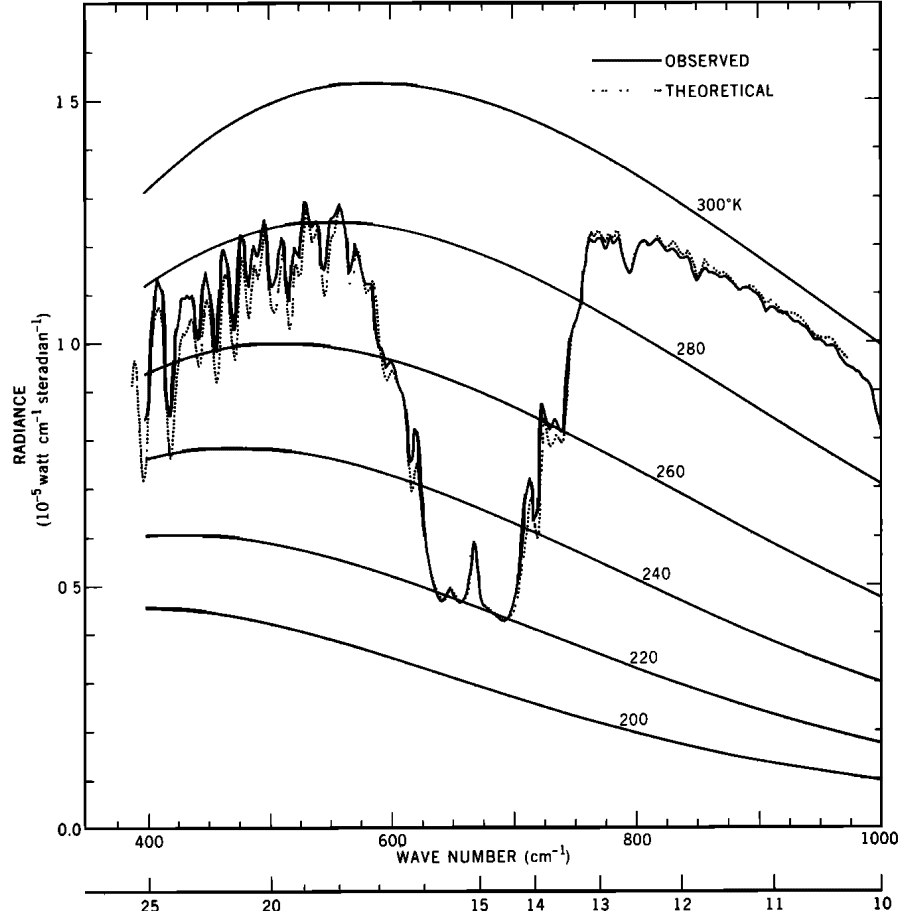

Here is a graph from 1970, zoomed in on the CO2 band. To be honest I haven't checked the provenance of Conrath1970. It comes from a time before the CAGW scare, yet the primitive satellite data and the atmospheric radiative modeling are practically indistinguishable from recent results.

The range between 10 and 13 microns is in the Atmospheric Window, where radiation escapes freely, so this snapshot is from the tropics with a surface temperature in the mid 20s Celsius, or about 295 kelvins.

CO2 absorbs all the surface radiation in the 14-16 micron band, and doesn't release it until the air is at about 220 K, or -53 C. More radiation is absorbed than emitted in the CO2 band. That difference in radiation energy must be accounted for.
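To put rough numbers on the 14-16 micron band and the 295 K and 220 K temperatures mentioned above, the Planck function can be evaluated at the band centre. This is a sketch under simplifying assumptions: pure blackbody emission at a single temperature, and a band centre of 667 cm^-1 (about 15 microns), which is the commonly cited value for the CO2 bending mode rather than something read off the posted graph. The function names are illustrative only.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def wavenumber_to_microns(wavenumber_cm):
    """Convert a wavenumber in cm^-1 to a wavelength in microns."""
    return 1.0e4 / wavenumber_cm


def planck_radiance(wavenumber_cm, temp_k):
    """Blackbody spectral radiance in W m^-2 sr^-1 per cm^-1."""
    nu = wavenumber_cm * 100.0  # cm^-1 -> m^-1
    per_inverse_metre = 2.0 * H * C ** 2 * nu ** 3 / math.expm1(H * C * nu / (K_B * temp_k))
    return per_inverse_metre * 100.0  # per m^-1 -> per cm^-1


band_centre = 667.0  # cm^-1, assumed CO2 band centre
warm = planck_radiance(band_centre, 295.0)  # surface-like emitter
cold = planck_radiance(band_centre, 220.0)  # high, cold emitter
print(f"{band_centre} cm^-1 is {wavenumber_to_microns(band_centre):.2f} microns")
print(f"radiance at 295 K: {warm:.4f}, at 220 K: {cold:.4f} W m^-2 sr^-1 per cm^-1")
print(f"ratio warm/cold: {warm / cold:.2f}")
```

In this idealised picture the cold layer emits only about a third of what the warm surface emits at the band centre, which is what shows up as the dip in a top-of-atmosphere spectrum.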

Water is responsible for the missing radiation in the band from 18 microns upward. It is no coincidence that the H2O absorbed radiation is released at a height that corresponds to the freezing point of water, where water precipitates and returns to the surface. Again more surface energy is absorbed by H2O than is emitted at a higher, cooler level. That difference in energy must be accounted for.

I say the missing energy is stored in the atmosphere, warming it. The warmer atmosphere warms the surface, causing more radiation in the Atmospheric Window, which can escape to space and cause cooling.

Without CO2 in the air, the Atmospheric Window would be wider. More radiation would escape freely, and less energy would be stored in the atmosphere. Both the surface and the air would be cooler.

SSDD makes many claims but never backs them up with explanations. He says conduction and convection are more efficient at moving energy around than is radiation. That is true, but he refuses to acknowledge that radiation is the ONLY way to shed energy to space.

The above graph shows a deficit of energy being shed in the GHG bands. If he has some alternate way of getting rid of the solar energy coming in then he should point it out.

I didn't read all of this...but it all has to do with the ocean's ability to absorb it. And temperature affects its ability to do that.

Hopefully that was more clear than 18 paragraphs.

Sure, there are some connections between temperature and the ocean's ability to absorb CO2 and convert it into different forms. But the anthropogenic addition is swamping that effect.

Wuwei

Gold Member

- Apr 18, 2015

- 5,196

- 1,083

- 255

"Here is a graph from 1970, zoomed in on the CO2 band......"

That is one of the best explanations that I have seen concerning what is happening at the TOA.

Billy_Bob

Diamond Member

CO2 absorbs all the surface radiation in the 14-16 micron band, and doesn't release it until the air about 220K, or -53C. More radiation is absorbed than emitted in the CO2 band. That difference in radiation energy must be accounted for.

CO2 does not warm except by conduction in our atmosphere. So it MUST collide in order to gain kinetic energy and warm. This means there must be another molecule that can hold energy and warm in order for it to warm.

If you remove water vapor from the atmosphere, cooling is more rapid with higher concentrations of CO2, as we have observed in Earth's desert regions. Warming of the solids is also quicker, which then heats the atmosphere above them quickly by conduction. Observed evidence shows that the air does not warm until it interacts with the warmed solids of Earth's surface. This is a well-documented fact.

So the question then becomes: can convection and air movement transfer the energy necessary to keep a "hot spot" from forming? That answer is a resounding YES, from all empirical observations to date.

Consider that an anvil cloud formation of 20,000 feet (top to bottom) can churn its top to bottom in a matter of about one minute. This clearly demonstrates that there is sufficient churn in Earth's atmosphere at any given time to keep a mid to upper tropospheric hot spot from ever forming. Wind speeds within the cloud formation can reach 200-250 mph.

We know from observations that water vapor can hold energy for upwards of 6-9 seconds before it cools enough for the energy to be released at a longer wavelength, outside of CO2's ability to slow it. None of the current GCMs account for this shift in energy output. This is precisely where your "missing energy" is, and it is not missing. Water vapor is an interesting thing to study; energy residency time is key to this issue.

All your graphing proves is that the major regions of energy release are outside of CO2's ability to affect them.

There you go again. Another spew of Cliff Clavin bafflegab. You make no sense, there are no coherent thoughts. Just sciencey words strung together.

I have been discussing the basics, radiation input and radiation output. If they are equal for the terrestrial system of Earth and atmosphere then no change happens in the stored energy, no change in the overall temperature.

I tend to focus more on the outgoing IR radiation side of that equation because of the simple CO2 connection.

But we could certainly discuss the other side if you want. The basic solar output is amazingly stable, but even small changes such as the Maunder Minimum seemingly caused the Little Ice Age. Or, because the reflected sunlight is also part of the terrestrial system output, we could discuss the effect of clouds on albedo. There don't even have to be more clouds; just changing the timing of cloud formation affects the amount of insolation absorbed by the surface.

There are many topics we could discuss, but unless you couch them in terms of how they affect the radiation balance they are just factoids that have little meaning.

The first thing to get in your head is that no matter how much more efficient conduction and convection are at moving energy around compared to radiation, it is only radiation that can shed energy to space.

The second most important thing to remember is that any imbalance of radiation input and output leads to storage or release of energy, changing the total heat content of the system.

GHGs restricted radiation loss and caused energy to be stored in the system, warming it until the input and output returned to equilibrium.
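The storage argument above (an imbalance between input and output changes the heat content until the two match again) can be illustrated with a zero-dimensional energy balance model stepped forward in time. Everything numeric here is an assumption chosen for illustration: 238 W/m^2 of absorbed sunlight, an "effective emissivity" of 0.61 tuned so that equilibrium lands near 288 K, and a heat capacity equivalent to roughly 100 m of water. It is a toy sketch, not a climate model.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def step_temperature(temp_k, absorbed, emissivity, heat_capacity, dt):
    """One forward-Euler step of C dT/dt = absorbed - emissivity*sigma*T^4."""
    net_flux = absorbed - emissivity * SIGMA * temp_k ** 4
    return temp_k + net_flux * dt / heat_capacity


def run_to_equilibrium(temp_k, absorbed=238.0, emissivity=0.61,
                       heat_capacity=4.2e8, dt=86400.0, years=200):
    """Integrate with daily steps; all defaults are illustrative assumptions."""
    for _ in range(int(years * 365)):
        temp_k = step_temperature(temp_k, absorbed, emissivity, heat_capacity, dt)
    return temp_k


def equilibrium_temperature(absorbed=238.0, emissivity=0.61):
    """Temperature at which outgoing radiation equals absorbed sunlight."""
    return (absorbed / (emissivity * SIGMA)) ** 0.25


print(f"start at 255 K, end at {run_to_equilibrium(255.0):.2f} K")
print(f"analytic equilibrium:  {equilibrium_temperature():.2f} K")
# A lower effective emissivity stands in for more restricted radiation loss.
print(f"with emissivity 0.60:  {equilibrium_temperature(emissivity=0.60):.2f} K")
```

In this toy setup, restricting the outgoing radiation (a smaller effective emissivity) raises the temperature at which input and output balance, which is the mechanism the post describes.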

Hahaha, so why don't you use that graph and figure out how ridiculously small the watts per steradian for the center of that band (~675 cm^-1) is?

The entire sphere would be 4pi times that number and only 1/2 of that (1/2 the 4pi*r^2) gets radiated down!

Try heating something with a heat (radiation) source that radiates a "grand" total of 0.042 watts, and I don't care how close you move it to whatever you want to heat. That's less power than the IR LED in a computer mouse.

Wow according to you my mouse pad should be a lot warmer where the mouse is parked.

Billy_Bob

Diamond Member

You won't even discuss the OBSERVATIONS and why they are and where the energy has moved...

CO2 absorbs all the surface radiation in the 14-16 micron band, and doesn't release it until the air is about 220 K, or -53 C. More radiation is absorbed than emitted in the CO2 band. That difference in radiation energy must be accounted for.

CO2 does not warm except by conduction in our atmosphere. So it MUST collide in order to gain kinetic energy and warm. This means it must have another molecule that can hold energy and warm in order for it to warm.

IF you remove water vapor from the atmosphere, cooling is more rapid with higher concentrations of CO2, as we have observed in Earth's desert regions. Warming of the solids is also quicker, which then heats the atmosphere above them quickly by conduction. Observed evidence shows that the air does not warm until it interacts with the warmed solids of Earth's surface. This is a well-documented fact.

SO the question then becomes: can convection and air movement transfer the energy necessary to keep a "hot spot" from forming? That answer is a resounding YES, from all empirical observations to date.

Consider that an anvil cloud formation of 20,000 feet (top to bottom) can churn its top to bottom in a matter of about one minute. It clearly demonstrates that there is sufficient churn in Earth's atmosphere at any given time to keep a mid to upper tropospheric hot spot from ever forming. Wind speeds within the cloud formation can reach 200-250 mph.
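As a quick unit check on the figures in that paragraph, the sketch below converts "20,000 feet in about one minute" into a speed in mph, to compare with the 200-250 mph quoted. It uses only the numbers stated in the post; the function name is made up for illustration.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def implied_speed_mph(depth_ft, turnover_s):
    """Average vertical speed needed to cover depth_ft in turnover_s seconds."""
    feet_per_second = depth_ft / turnover_s
    return feet_per_second * SECONDS_PER_HOUR / FEET_PER_MILE


speed = implied_speed_mph(20_000, 60)
print(f"implied vertical speed: {speed:.0f} mph")
```

The result falls inside the 200-250 mph range given for wind speeds in the formation, so the two figures in the post are at least consistent with each other.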

We know from observations that water vapor can hold energy for upwards of 6-9 seconds before it cools enough for the energy to be released at a longer wavelength, outside of CO2's ability to slow it. None of the current GCMs account for this shift in energy output. This is precisely where your "missing energy" is, and it is not missing. Water vapor is an interesting thing to study; energy residency time is key to this issue.

All your graphing proves is that the major regions of energy release are outside of CO2's ability to affect them.

You're so set on "consensus science" you will not look beyond your blinders. I can't fix Stupid.. Enjoy ignorance...

Yeah, and none of them even want to consider how many watts from the sun the CO2 prevents from getting to the surface. According to the warmers that does not matter, because you can make up for a power loss by back-radiating a tiny fraction of what's left over and a portion of the total number of watts the CO2 absorbed from the ground's black body radiation. The cheat is to use the entire number of watts instead of the integral portion from 14 to 16 microns. My guess is that these "scientists" do that because none of them have a clue how to get the integral of a plotted function... and they call all those who do know "science deniers".

Like for example Heinz Hug:

The Climate Catastrophe - A Spectroscopic Artifact

We integrated from a value E = 3 (above which absorption deems negligible, related to the way through the whole troposphere) until the ends (E = 0) of the R- and P-branch. So the edges are fully considered. They start at 14.00 µm for the P-branch and at 15.80 µm for the R-branch, going down to the base line E=0. IPCC starts with 13.7 and 16 µm [13]. For the 15 µm band our result was:

The radiative forcing for doubling can be calculated by using this figure. If we allocate an absorption of 32 W/m2 [14] over 180º steradiant to the total integral (area) of the n3 band as observed from satellite measurements (Hanel et al., 1971) and applied to a standard atmosphere, and take an increment of 0.17%, the absorption is 0.054 W/m2 - and not 4.3 W/m2.

This is roughly 80 times less than IPCC's radiative forcing.
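The arithmetic in the quoted passage can be checked directly. This sketch uses only the numbers the quote states (32 W/m2 allocated to the band, a 0.17% increment, and the 4.3 W/m2 it attributes to the IPCC); it checks the multiplication and the ratio, not the physics behind those inputs.

```python
band_absorption_w_m2 = 32.0   # from the quote
increment = 0.17 / 100.0      # 0.17 % from the quote
ipcc_forcing_w_m2 = 4.3       # figure the quote attributes to the IPCC

added_absorption = band_absorption_w_m2 * increment
ratio = ipcc_forcing_w_m2 / added_absorption

print(f"added absorption: {added_absorption:.4f} W/m^2")
print(f"ratio to the 4.3 W/m^2 figure: {ratio:.1f}")
```

So the "roughly 80 times" follows from the quoted inputs; whether the 0.17% increment is the right input is the part that is actually in dispute.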

Billy_Bob

Diamond Member

OK, here is a little experiment for all you "consensus science" folks..

You have two tubes 10 meters long. One tube is 1.5 meters in diameter and the second is 1.65 meters in diameter.

In the first tube you place Earth's atmospheric content WITHOUT WATER VAPOR (0 humidity) and you seal it. The second tube is placed over the first and the space between them is equalized and filled with nitrogen or argon gas. The tube as a whole is allowed to rise to room temperature, and the room is controlled to within one degree.

Inside the inner tube you will place two sensors, one at 4 meters and one at 9 meters from one end, making sure they do not react (warm the sensor) to LWIR radiation. This means they will measure the conduction and the ambient air temp correctly. The ends are opaque to LWIR in the band of 12-20 um (the full width of the CO2 atmospheric window). Verify that your narrow band energy source is the only input to the tube, and direct 200 W/m^2 from the narrow band source along the tube's long axis.

The outer tube will act as a thermal blanket, allowing any energy reaction to be measured inside the inner tube. Now turn on your LWIR energy source and measure the temp inside the tubing over time.

I've done this experiment several times, with up to 1200 W/m^2. The inner tube never warms.. there is no temperature differential between the two sensors.

Can you tell me why it does not warm? Can you tell me why there is no temperature differential? (distance from energy source)
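For scale, here is a back-of-envelope sketch of how fast the gas in such a tube would warm if a given fraction of the beam were absorbed and nothing leaked out. Only the 10 m length and the 200 W/m^2 come from the post. The air density, the heat capacity, the beam filling the whole cross-section, and the absorbed fractions are all assumptions for illustration, and the function name is hypothetical.

```python
TUBE_LENGTH_M = 10.0   # from the post

# Assumptions (not from the post): dry air near room conditions in a sealed,
# rigid tube, so the constant-volume heat capacity is used.
AIR_DENSITY = 1.2      # kg/m^3
AIR_CV = 718.0         # J/(kg K)


def heating_rate_k_per_s(flux_w_m2, absorbed_fraction):
    """Warming rate of the gas with no heat losses.

    Power in = flux * area * absorbed_fraction, gas mass = density * area * length,
    so the cross-section area cancels out.
    """
    return absorbed_fraction * flux_w_m2 / (AIR_DENSITY * AIR_CV * TUBE_LENGTH_M)


for fraction in (1.0, 0.1, 0.01):
    rate_per_min = heating_rate_k_per_s(200.0, fraction) * 60.0
    print(f"absorbed fraction {fraction:>4}: {rate_per_min:.3f} K per minute")
```

The absorbed fraction is the unknown here; the sketch only shows how the expected warming scales with it, and it ignores conduction into the tube walls.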
