I found a sleeper.

This is huge, and no one even knows about it.

www.nature.com

You have to read through this in detail. There's a lot of math, but it's not too hard and it's intuitive. Mostly you have to understand how they build the "tag vector".

What they're showing us here is pretty astounding. It answers a lot of questions at the same time.

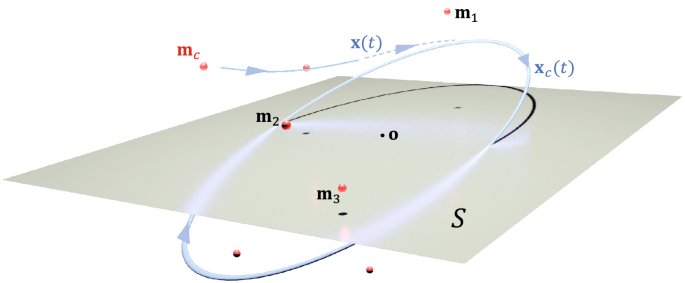

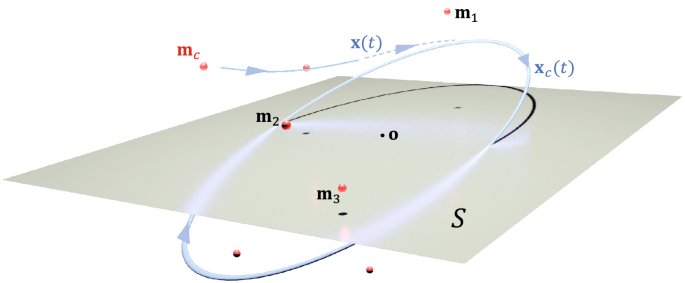

This model is showing us how memories are stored and retrieved in the brain.

And why "brain waves" are important for that.

STDP stands for "spike-timing-dependent plasticity", and it is only slightly different from a Hebbian learning rule. The idea is that synapses get reinforced on the basis of correlations between input and output spikes, with the sign and size of the change set by their relative timing. In this particular case, the learning windows are deliberately asymmetrical.
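For anyone who hasn't seen STDP written down, here is a minimal sketch of the textbook pair-based exponential window, next to a plain rate-based Hebbian rule for contrast. The amplitudes and time constants (`A_PLUS`, `A_MINUS`, `TAU_PLUS`, `TAU_MINUS`) and the function names are hypothetical, picked only to make the window asymmetrical. This is not claimed to be the exact rule used in the paper.

```python
import math

# Hypothetical parameters for a textbook pair-based exponential STDP window.
# Illustrative values only, NOT taken from the Scientific Reports paper.
A_PLUS = 1.0      # peak potentiation (pre fires before post)
A_MINUS = 0.5     # peak depression (post fires before pre); A_MINUS != A_PLUS -> asymmetric
TAU_PLUS = 20.0   # ms, decay constant of the potentiation side
TAU_MINUS = 40.0  # ms, decay constant of the depression side


def stdp_dw(dt_ms: float) -> float:
    """Weight change for one pre/post spike pair, where dt_ms = t_post - t_pre."""
    if dt_ms > 0:   # pre before post: strengthen, less so the larger the gap
        return A_PLUS * math.exp(-dt_ms / TAU_PLUS)
    if dt_ms < 0:   # post before pre: weaken, less so the larger the gap
        return -A_MINUS * math.exp(dt_ms / TAU_MINUS)
    return 0.0      # exactly simultaneous spikes: no change in this sketch


def hebbian_dw(pre_rate: float, post_rate: float, eta: float = 0.01) -> float:
    """Plain rate-based Hebbian rule: depends on correlation only, not on spike order."""
    return eta * pre_rate * post_rate


if __name__ == "__main__":
    # Same time gap, opposite order: STDP gives opposite signs and different sizes.
    for dt in (-40, -10, 10, 40):
        print(f"dt={dt:+} ms  dw={stdp_dw(dt):+.3f}")
```

The point of the comparison is that the Hebbian rule is symmetric in its two inputs, while the STDP window cares about which neuron fired first, which is what lets a synapse remember order.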

This model accounts for a plethora of observations in real brains. In the rat hippocampus, memory sequences are played forward before navigation and backward afterwards. Every encoded sequence can also be played back at different speeds, both forward and backward. This model does all of that, and it provides a precise mathematical definition of the components of an episode and the form in which they're stored.
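To get a feel for why an asymmetric window matters for forward and backward replay, here is a toy sketch. It is NOT the paper's tag-vector mechanism. It stores one sequence in a weight matrix using a hypothetical exponential STDP window, then reads it out greedily: following outgoing weights replays the sequence forward, following incoming weights replays it in reverse. The function names, parameters, and the greedy readout are all assumptions made for illustration.

```python
import math

# Hypothetical asymmetric STDP window; illustrative parameters, not the paper's.
A_PLUS, A_MINUS = 1.0, 0.5
TAU_PLUS, TAU_MINUS = 20.0, 40.0


def stdp_dw(dt_ms):
    """Pair-based exponential window, dt_ms = t_post - t_pre."""
    if dt_ms > 0:
        return A_PLUS * math.exp(-dt_ms / TAU_PLUS)
    if dt_ms < 0:
        return -A_MINUS * math.exp(dt_ms / TAU_MINUS)
    return 0.0


def learn_weights(order, n, interval_ms=10.0):
    """Present the sequence once (one spike per neuron) and apply STDP to every pair.
    w[i][j] is the synapse from neuron i (pre) to neuron j (post)."""
    spike_time = {neuron: k * interval_ms for k, neuron in enumerate(order)}
    w = [[0.0] * n for _ in range(n)]
    for i in order:
        for j in order:
            if i != j:
                w[i][j] += stdp_dw(spike_time[j] - spike_time[i])
    return w


def replay(w, start, steps, reverse=False):
    """Greedy toy replay: jump to the unvisited neuron with the strongest positive link.
    Forward uses outgoing weights w[cur][j]; reverse uses incoming weights w[j][cur]."""
    path, visited, cur = [start], {start}, start
    for _ in range(steps):
        candidates = [j for j in range(len(w)) if j not in visited]
        if not candidates:
            break
        score = (lambda j: w[j][cur]) if reverse else (lambda j: w[cur][j])
        nxt = max(candidates, key=score)
        if score(nxt) <= 0:  # no potentiated link left to follow
            break
        path.append(nxt)
        visited.add(nxt)
        cur = nxt
    return path


def schedule(path, step_ms):
    """Attach replay times; step_ms is a free parameter here, so speed is independent of order."""
    return [(k * step_ms, neuron) for k, neuron in enumerate(path)]


if __name__ == "__main__":
    w = learn_weights([0, 1, 2, 3, 4], n=5)
    print("forward:", schedule(replay(w, 0, 10), step_ms=5.0))
    print("reverse:", schedule(replay(w, 4, 10, reverse=True), step_ms=2.0))
```

In this toy, replay order comes only from the stored weights, while the playback speed is set independently by `step_ms`, so the same memory can be read forward or backward at any rate. How the actual paper achieves this is described in its own math.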

An STDP-based encoding method for associative and composite data - Scientific Reports

Spike-timing-dependent plasticity(STDP) is a biological process of synaptic modification caused by the difference of firing order and timing between neurons. One of neurodynamical roles of STDP is to form a macroscopic geometrical structure in the neuronal state space in response to a periodic...
