Vastator

- Oct 14, 2014

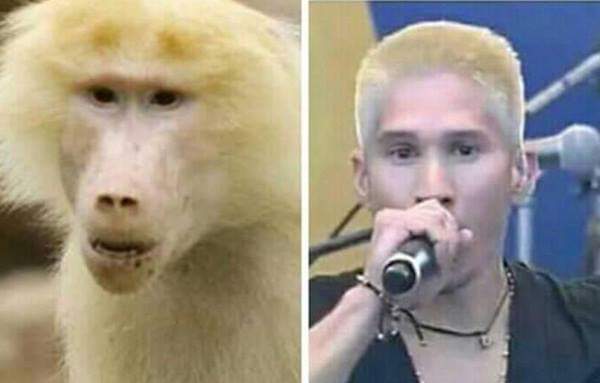

Despite countless attempts to resolve this issue, the only “fix” Google could come up with... was to block the algorithm from identifying primates at all. Not really a fix. But apparently some people were getting rather upset at the algorithm’s shortcomings.

Google ‘fixed’ its racist algorithm by removing gorillas from its image-labeling tech

But it wasn’t just Google’s AI that demonstrated this anomaly...

Google's solution to accidental algorithmic racism: ban gorillas

“Flickr released a similar feature, auto-tagging – which had an almost identical set of problems.”

And there are many more. Given this glaring shortcoming in AI recognition technology, is the facial recognition software currently used by many agencies really trustworthy?
