Nonlinearity-induced topological phase transition characterized by the nonlinear Chern number - Nature Physics

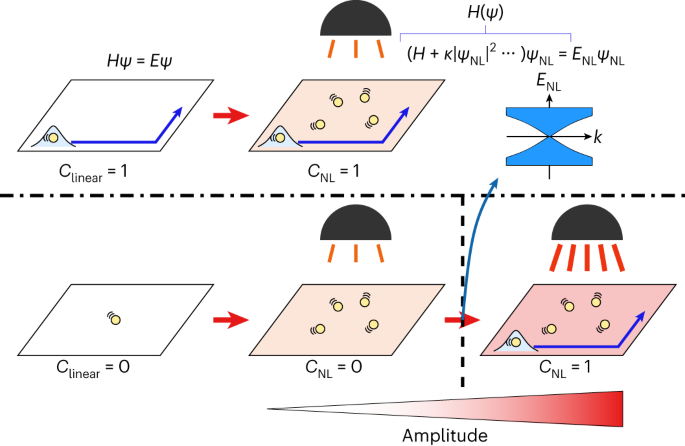

Linear topological systems can be characterized using invariants such as the Chern number. This concept can be extended to the nonlinear regime, giving rise to nonlinearity-induced topological phase transitions.
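For a concrete picture of the Chern number, here is a minimal sketch covering the linear case only. It is not the article's method: the two-band Qi-Wu-Zhang lattice model and the plaquette (Fukui-Hatsugai-Suzuki style) discretization are my own choices for illustration, and the function names `qwz_hamiltonian` and `chern_number`, the mass parameter `m`, and the grid size `n` are all hypothetical.

```python
import numpy as np

def qwz_hamiltonian(kx, ky, m):
    """Two-band Qi-Wu-Zhang Bloch Hamiltonian (illustrative model, not from the article)."""
    sx = np.array([[0, 1], [1, 0]], dtype=complex)
    sy = np.array([[0, -1j], [1j, 0]], dtype=complex)
    sz = np.array([[1, 0], [0, -1]], dtype=complex)
    return np.sin(kx) * sx + np.sin(ky) * sy + (m + np.cos(kx) + np.cos(ky)) * sz

def chern_number(m, n=24):
    """Chern number of the lower band from Berry phases around grid plaquettes."""
    ks = np.linspace(0, 2 * np.pi, n, endpoint=False)
    u = np.empty((n, n, 2), dtype=complex)
    for i, kx in enumerate(ks):
        for j, ky in enumerate(ks):
            # eigh returns eigenvalues in ascending order, so column 0 is the lower band
            _, vecs = np.linalg.eigh(qwz_hamiltonian(kx, ky, m))
            u[i, j] = vecs[:, 0]
    # Overlaps between neighbouring k-points; np.roll wraps around the Brillouin zone
    link_x = np.sum(u.conj() * np.roll(u, -1, axis=0), axis=-1)
    link_y = np.sum(u.conj() * np.roll(u, -1, axis=1), axis=-1)
    # Gauge-invariant product of overlaps around each elementary plaquette
    plaquette = (link_x
                 * np.roll(link_y, -1, axis=0)
                 * np.roll(link_x, -1, axis=1).conj()
                 * link_y.conj())
    # The plaquette phases sum to 2*pi times an integer
    return int(round(float(np.angle(plaquette).sum() / (2 * np.pi))))

if __name__ == "__main__":
    for m in (-3.0, -1.0, 1.0, 3.0):
        print(m, chern_number(m))
```

In this model the result has magnitude 1 for 0 < |m| < 2 and is 0 for |m| > 2, with the sign flipping between positive and negative `m`. The gap closes at `m = 0` and `m = ±2`, which is where the linear topological phase transitions sit. The article's point, as I read the summary, is that nonlinearity can drive a transition of this kind as well.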

The article suggests a parallel and entirely different form of computation for AI.

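Traditionally, neural networks are linear-ish: most of the computation is matrix multiplication, with simple nonlinearities applied in between. That is true even of the transformers underlying ChatGPT.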

However, the linked article points to a controllable alternative form of computation based on nonlinearity and criticality.

Of immense interest are the "phase transitions" that happen in small regions of the network; when those regions couple, they give rise to a global topology.

The same kind of thing happens in ferromagnets and in biological cells.

This interests me because I want to program my own personal AI. Everything needed is available online, including training datasets for natural language and the like.