> Consensus isn't science, moron. Truth isn't determined by a majority vote.

The current best knowledge is. It's called the scientific consensus. You are a scientific illiterate if you don't know that.

But why am I stating the obvious?

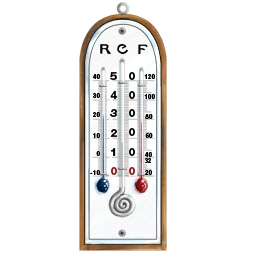

> Please tell us how we have 1880 temperatures accurate to a tenth of a degree.

Probably because accurate thermometers were carefully calibrated. Please tell us why you are such a dullard.

Please tell us how we have 1880 temperatures accurate to a tenth of a degree.

I've explained it to you and other deniers before, several times. Here are some examples of me doing that.

http://www.usmessageboard.com/posts/11774569/

http://www.usmessageboard.com/posts/13259890/

http://www.usmessageboard.com/posts/10425762/

And being that you're a mewling cult liar, you're still pretending to have never seen the explanations. You've proven yourself to be profoundly dishonest, so "fuck off, liar" is the only response owed to you by anyone.

Now, for those willing to learn, here's the answer Frank and his pals always run from.

Standard error - Wikipedia

---

SEM is usually estimated by the sample estimate of the population standard deviation (sample standard deviation) divided by the square root of the sample size (assuming statistical independence of the values in the sample):

$$\sigma_{\bar{x}} \approx \frac{s}{\sqrt{n}}$$

where

s is the sample standard deviation (i.e., the sample-based estimate of the standard deviation of the population), and

n is the size (number of observations) of the sample.

---

That is, the more measurements you use in the average, the smaller the error of the average is. As long as the individual errors are independent, the error goes down in inverse proportion to the square root of the number of measurements. If you average 10,000 measurements, the error of the average is 100 times less than the error of each individual measurement.
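
A minimal sketch of that square-root rule, not anyone's actual temperature analysis. It assumes each reading carries independent, normally distributed error with a made-up spread `sigma`. The helper names `sem` and `spread_of_averages` are hypothetical. It compares the predicted error of the average, `sigma / sqrt(n)`, with the scatter actually seen when many averages are simulated.

```python
import math
import random
import statistics


def sem(sample):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return statistics.stdev(sample) / math.sqrt(len(sample))


def spread_of_averages(n, trials, sigma, rng):
    """Empirical standard deviation of the average of n noisy readings."""
    averages = [
        statistics.fmean([rng.gauss(0.0, sigma) for _ in range(n)])
        for _ in range(trials)
    ]
    return statistics.stdev(averages)


rng = random.Random(0)  # fixed seed so the run is repeatable
sigma = 1.0             # assumed error of a single reading, in degrees

for n in (1, 100, 10_000):
    predicted = sigma / math.sqrt(n)
    observed = spread_of_averages(n, trials=200, sigma=sigma, rng=rng)
    print(f"n={n:>6}  predicted={predicted:.4f}  observed={observed:.4f}")
```

The observed scatter should track the prediction only roughly, since it is itself estimated from a finite number of simulated averages. The rule leans on the independence assumption stated in the quoted definition.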

That's why measurements of average temperature from long ago can have such small errors. Most of the deniers here, including the ones claiming a science background, are shockingly clueless about such basic statistics. They'd literally fail a Statistics 101 class, which is how we know that the ones claiming such a science background are open frauds. Nobody who really has a science background would make such a bonehead error. And it won't matter that I just took the time to explain it to them, again. They'll just add statistics to their list of "Basic math and science which has been known for centuries which we now know is really totally wrong, because the denier cult says so."

> "Best knowledge" isn't absolute truth. Majority vote doesn't determine scientific facts.

But it is the best knowledge we have. If we're going to act we should act on the best knowledge. It's not exactly rocket science to work that out.

Again, absolute truth does not exist in scientific theories. Evidence and consensus are what count. I don't know how many times I'll have to say that. I know, I'll let someone else say it for me.

Common misconceptions about science I: “Scientific proof”

https://www.psychologytoday.com

One of the most common misconceptions concerns the so-called “scientific proofs.” Contrary to popular belief, there is no such thing as a scientific proof.

Proofs exist only in mathematics and logic, not in science. Mathematics and logic are both closed, self-contained systems of propositions, whereas science is empirical and deals with nature as it exists. The primary criterion and standard of evaluation of scientific theory is evidence, not proof. All else equal (such as internal logical consistency and parsimony), scientists prefer theories for which there is more and better evidence to theories for which there is less and worse evidence. Proofs are not the currency of science.

Proofs have two features that do not exist in science: They are final, and they are binary. Once a theorem is proven, it will forever be true and there will be nothing in the future that will threaten its status as a proven theorem (unless a flaw is discovered in the proof). Apart from a discovery of an error, a proven theorem will forever and always be a proven theorem.

In contrast, all scientific knowledge is tentative and provisional, and nothing is final. There is no such thing as final proven knowledge in science. The currently accepted theory of a phenomenon is simply the best explanation for it among all available alternatives. Its status as the accepted theory is contingent on what other theories are available and might suddenly change tomorrow if there appears a better theory or new evidence that might challenge the accepted theory. No knowledge or theory (which embodies scientific knowledge) is final. That, by the way, is why science is so much fun.

Fake news using statistics to give the impression of accuracy

Yep, cultist Frank is now completely off the rails. He's actually screaming that the statistics laws that have been known for centuries are "fake news".

Sadly, that sort of butthurt insanity is now seen across the entire denier cult. The whole cult considers it a point of pride to be stupid and totally detached from reality, and looks with paranoid suspicion upon any intelligent person who uses liberal concepts like "facts" and "data".

The temperature measurements you generally see plotted on a graph are an average over a year with many thermometers. When you average just 100 readings you can get 1/10 degree accuracy from readings that are each only good to about a degree, since the standard error of the average is inversely proportional to the square root of the number of readings.

Thermometers weren't accurate to a tenth of a degree in 1880. In fact, it's only a few short decades ago we were accurate to that degree. Why pretend we have data that can't possibly exist?

> One man with a good theory can defeat the entire scientific community.

You are a fuckwit. It's not a good theory until the scientific community agrees it's a good theory because it has good evidence.

That is why a yearly average can show a higher degree of precision than any single reading: even one thermometer read once a day gives 365 readings per year.

However, that doesn't mean that the earth's average temperature was measured accurately. It only means that the averaged results can be plotted on a graph with 1/10 degree precision.
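
One way to read that distinction, sketched under loud assumptions: every number below is made up, and the shared calibration offset `bias` is hypothetical, not something taken from any real station record. Averaging shrinks the random scatter, but an offset common to every reading passes straight through to the average.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed so the run is repeatable

true_temp = 15.0  # hypothetical true value, in degrees
bias = 0.5        # hypothetical offset shared by every reading, in degrees
noise_sd = 1.0    # hypothetical random error of a single reading, in degrees

# Each reading = truth + shared offset + independent random error.
readings = [true_temp + bias + rng.gauss(0.0, noise_sd) for _ in range(10_000)]

average = statistics.fmean(readings)
error_of_average = average - true_temp

print(f"scatter of single readings: {statistics.stdev(readings):.3f}")
print(f"error of the average:       {error_of_average:.3f}")
```

With 10,000 readings the random part of the error is on the order of 0.01 degrees, so what remains in `error_of_average` is essentially the assumed offset.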

The yearly rate of temperature rise can be estimated even more precisely with linear regression.
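
A rough sketch of why a fitted trend can be tighter than any single yearly value. The series is simulated, not real data, and `true_slope`, `yearly_noise`, and the helper `fit_trend` are hypothetical names chosen for illustration. The code does an ordinary least-squares fit and reports the standard error of the slope.

```python
import math
import random


def fit_trend(years, temps):
    """Ordinary least-squares slope and the standard error of that slope."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum(
        (y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps)
    )
    # Residual variance uses n - 2 because two parameters were fitted.
    slope_se = math.sqrt(residual_ss / (n - 2) / sxx)
    return slope, slope_se


rng = random.Random(2)  # fixed seed so the run is repeatable
true_slope = 0.01       # hypothetical trend, in degrees per year
yearly_noise = 0.1      # hypothetical error of each yearly average, in degrees

years = list(range(1880, 1980))
temps = [true_slope * (y - 1880) + rng.gauss(0.0, yearly_noise) for y in years]

slope, slope_se = fit_trend(years, temps)
print(f"fitted slope: {slope:.5f} +/- {slope_se:.5f} degrees per year")
```

The slope's standard error shrinks both with the number of years and with how far apart they are spread, which is why a century-long fit can be far tighter than the error on any one yearly average. This still assumes independent errors from year to year.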

> Thermometers weren't accurate to a tenth of a degree in 1880. In fact, it's only a few short decades ago we were accurate to that degree. Why pretend we have data that can't possibly exist?

You really are a dullard. How many lab or meteorological thermometers have you read? Yet you witter on as though you have a clue.

> Can you please link to the data set showing "100 readings" from 1880?
>
> Thank you

You didn't understand the post! No link is needed. As I said, a single thermometer will show around 365 readings in one year.

> Since you're committed to statistical dishonesty, you can say that one thermometer took a reading a second and therefore had 86,400 readings in a single day! So you're probably accurate to a hundredth of a degree back in 1880, notwithstanding the 5F MOE.

I said one reading a day. That's 365 a year.

'Defeat the entire scientific community'!

All you do is reveal greater depths of ignorance.

> The entire scientific community refused to accept it

So when did it become the scientific consensus? See how it works, science illiterate?
