As is well known, digital computers are incapable of generating truly random numbers.

The best they can do is "pseudo-random", which means the numbers look random but come from a deterministic algorithm, so the sequence will repeat after a long enough time.
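The repetition is easy to see in a toy generator. The sketch below is an illustration only: it uses a tiny linear congruential generator whose constants (`a=5`, `c=3`, `m=16`) are arbitrary choices made here, not anything a real library uses, and the helper name `lcg_period` is hypothetical. It counts how many steps pass before the generator returns to a state it has already visited.

```python
def lcg_period(seed: int, a: int = 5, c: int = 3, m: int = 16) -> int:
    """Length of the cycle of the toy generator x -> (a*x + c) % m."""
    seen = {}          # state -> step at which it was first visited
    x = seed
    step = 0
    while x not in seen:
        seen[x] = step
        x = (a * x + c) % m
        step += 1
    # x is the first repeated state; the cycle length is the gap between visits
    return step - seen[x]


# With only m = 16 possible states, the sequence must repeat within 16 steps.
print(lcg_period(seed=7))
```

Real generators work on the same principle with a far larger state. Python's built-in `random` module, for example, uses the Mersenne Twister, whose period is 2**19937 - 1: astronomically long, but still finite.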

It turns out that, as far as anyone knows, the quantum world is the one place in the universe where we get truly random behavior.

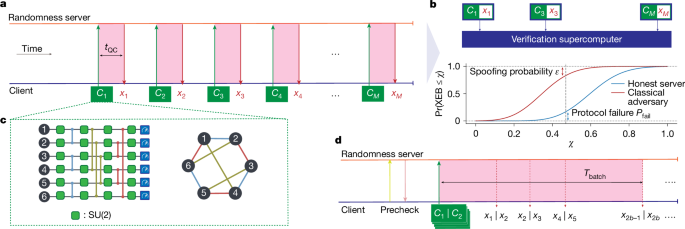

And now, for the first time, a quantum computer has been shown to harness this behavior to produce certifiably random numbers.

From the standpoint of physics, randomness is a primary property of the quantum universe. No one knows how it happens. One of the very interesting things about it is that the distribution of these random numbers is flat (uniform), not Gaussian. In other words, it does not look like the bell curve that the central limit theorem predicts when many small independent effects are added together.

Which in turn suggests that there's a single process underlying it (as distinct from a bunch of little processes).
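The contrast between "one flat process" and "many little processes added together" can be illustrated numerically. This is a rough sketch, not evidence about quantum hardware: it uses Python's ordinary pseudorandom generator as a stand-in, and the sample size, the choice of 12 summands, and the helper name `excess_kurtosis` are all assumptions made for the example. Excess kurtosis is 0 for a Gaussian and -1.2 for a flat distribution.

```python
import random
import statistics


def excess_kurtosis(samples):
    """Fourth standardized moment minus 3 (0 for a Gaussian)."""
    mean = statistics.fmean(samples)
    var = statistics.fmean([(x - mean) ** 2 for x in samples])
    fourth = statistics.fmean([(x - mean) ** 4 for x in samples])
    return fourth / var ** 2 - 3.0


rng = random.Random(0)   # seeded stand-in; a quantum source would replace this
N = 50_000

# One flat draw per sample: theory gives excess kurtosis -1.2.
single = [rng.random() for _ in range(N)]

# Twelve flat draws added per sample: theory gives -1.2 / 12 = -0.1,
# already close to the Gaussian value of 0.
summed = [sum(rng.random() for _ in range(12)) for _ in range(N)]

k_single = excess_kurtosis(single)
k_sum = excess_kurtosis(summed)
print("single draw:", round(k_single, 3))
print("sum of 12:  ", round(k_sum, 3))
```

Adding up even a dozen independent flat contributions already pushes the shape toward a bell curve, which is the intuition behind the "single process" remark.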

Nothing in this universe would be possible without the randomness. Everything from our consciousness to light itself depends on it.

Mathematically, it is entirely unclear whether there is any connection between randomness and quantization. No one knows if these are different processes, or part of the same process. Quantization is associated with counting, whereas randomness falls into the "uncountable" category. One can think of this in terms of the difference between the integers and the reals. The integers are "countably infinite" whereas the reals are "uncountable".

The relationship between the two was explored by the mathematician Georg Cantor, who introduced the famous Cantor set, also known as Cantor dust. It works like this:

Quantum processes generate random real numbers between 0 and 1. So take the interval [0,1] and chop out the open middle third. (Which means you now have two intervals remaining, the left third and the right third, each of which has length 1/3.) For every remaining interval, chop out the middle third, and keep doing this recursively an infinite number of times. You end up with a "dust". The total length removed adds up to 1, so the dust has zero length, and yet it has the same number of points as the interval it started from: its cardinality is the same as that of the entire interval.
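The first few rounds of the construction can be sketched in code. This is a minimal illustration with hypothetical helper names (`remove_middle_thirds`, `cantor_intervals`, `total_length`); it uses exact fractions and can only ever reach a finite depth, whereas the dust itself is the limit of infinitely many rounds.

```python
from fractions import Fraction


def remove_middle_thirds(intervals):
    """Replace each (lo, hi) by its left third and its right third."""
    out = []
    for lo, hi in intervals:
        third = (hi - lo) / 3
        out.append((lo, lo + third))
        out.append((hi - third, hi))
    return out


def cantor_intervals(depth):
    """Intervals remaining after `depth` rounds, starting from [0, 1]."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(depth):
        intervals = remove_middle_thirds(intervals)
    return intervals


def total_length(intervals):
    return sum(hi - lo for lo, hi in intervals)


for depth in range(5):
    pieces = cantor_intervals(depth)
    print(depth, len(pieces), total_length(pieces))
```

Each round doubles the number of pieces and multiplies the remaining length by 2/3, so the length shrinks toward zero while the number of pieces grows without bound. The cardinality claim is usually argued separately: the surviving points are exactly those with a base-3 expansion using only the digits 0 and 2, and swapping each 2 for a 1 and reading the result in base 2 maps them onto all of [0,1].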

This remarkable and counterintuitive result arises because we're trying to count the reals, which, apparently, doesn't work. Intuitively, 'points' are things we count one at a time; topology replaces this with the concept of "neighborhoods", which overlap in uncountable ways.

So when we generate a random number, we're not really generating a 'point'; we're generating a neighborhood. This concept is driven home in probability theory. If you have a continuous distribution on the interval (0,1), the probability of getting "exactly" 0.5 is zero. However, the probability of getting a result in an epsilon-neighborhood around 0.5 is finite and positive, provided the density is positive there. In other words, you have to integrate over the neighborhood to get a non-zero probability.
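A small numerical sketch makes the point concrete. It assumes the flat (uniform) density on (0,1) purely as an example, and the function names `interval_probability` and `uniform_density` are made up for this illustration. The probability of a neighborhood is computed by integrating the density over it with a simple midpoint rule.

```python
def interval_probability(density, lo, hi, steps=10_000):
    """Midpoint-rule estimate of the integral of `density` over [lo, hi]."""
    if hi <= lo:
        return 0.0   # a single point (or empty interval) has zero width
    width = (hi - lo) / steps
    return sum(density(lo + (i + 0.5) * width) for i in range(steps)) * width


def uniform_density(x):
    """Flat density on (0, 1); an assumption chosen for the example."""
    return 1.0 if 0.0 < x < 1.0 else 0.0


# Shrinking neighborhoods around 0.5: the probability is about 2 * eps.
for eps in (0.1, 0.01, 0.001):
    p = interval_probability(uniform_density, 0.5 - eps, 0.5 + eps)
    print(eps, p)

# The "neighborhood" of width zero, i.e. the exact point 0.5.
print(interval_probability(uniform_density, 0.5, 0.5))
```

For the flat density, the probability of the epsilon-neighborhood is simply its width, 2 * eps, which stays positive for every eps > 0 and only reaches zero at the single point.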

Source: "Quantum computer generates strings of certifiably random numbers", Physics World (physicsworld.com). Quantinuum’s trapped-ion computer has also been used to study problems in quantum magnetism and knot theory.
