Synthaholic

Diamond Member

- Jul 21, 2010

- 75,787

- 73,508

- 3,605

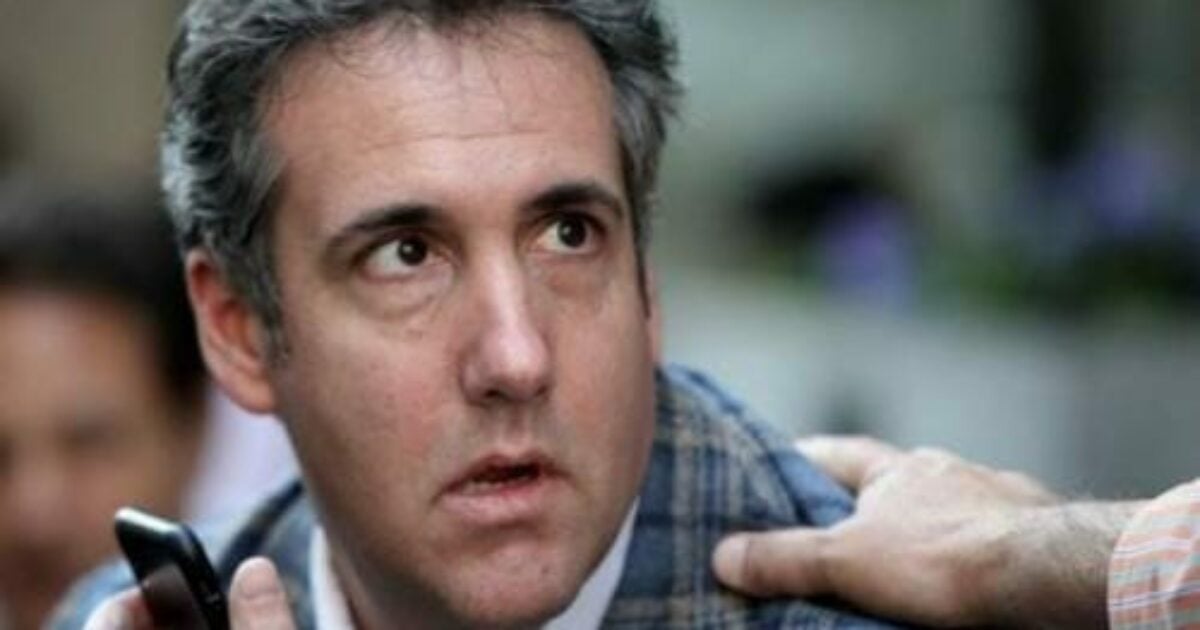

I'm saying that if it's some kind of "gotcha" on Michael Cohen, it's a huge nothingburger.

I really don't think you have considered the implications of AI. It's not a nothingburger when lawyers submit fiction written by Google programs. Imagine if the judge had not checked.

It is also quite notable that Cohen got nailed doing it. It's like cheating on a term paper, or plagiarizing a thesis.

Just wait until AI is designing buildings and airplanes.

In theory, it should get better than humans at what it does. So far, it's not really there, but it can do stuff like write decent poetry, and it can do it instantly. Most humans can't do that even given much more time.

Self-driving cars are another story. Been a long time coming, and they are fraught with problems for now.

AI is not to be trusted for quite a while. AI 'hallucinates' in its current form.

AI hallucinations refer to the problem where generative AI models create confident, plausible outputs that seem like facts, but are in fact completely made up by the model. This problem is often caused by limitations or biases in training data and algorithms. The outputs generated by the model may be factually incorrect or unrelated to the given context. AI hallucinations can result in producing content that is wrong or even harmful.