
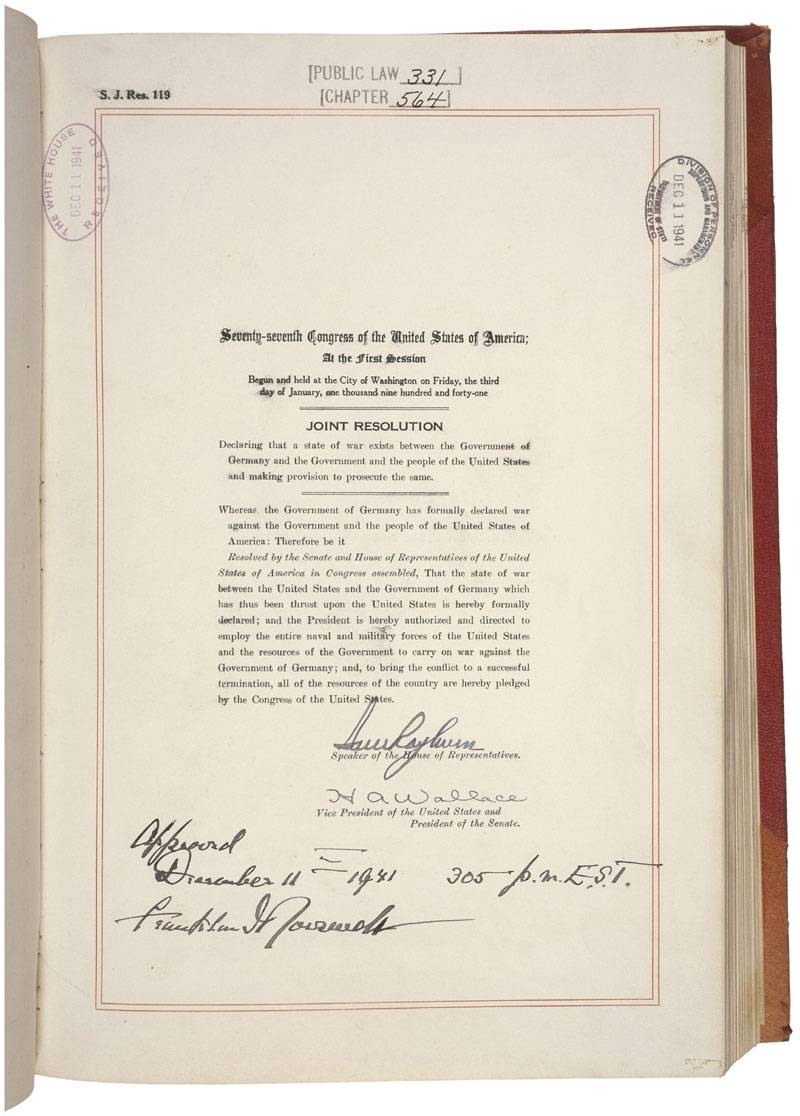

Did the US ever declare war on Germany after PH?

- Thread starter ginscpy

- Start date

BolshevikHunter

Rookie

- Banned

- #2

Think Hitler beat them to the punch - declared war on the US. (bad move)

Not sure if the US ever declared war on Germany.

Yes, Hitler declared war on the USA after Pearl Harbor. He had no choice because of Germany's Axis treaty with Japan. I am pretty sure that we declared war on Germany at the same time as Japan for that same reason: they were allies. Japan never told Hitler they were gonna attack Hawaii. In fact, the agreement was that Japan would attack Russia from the east. ~BH

Midnight Marauder

Rookie

- Feb 28, 2009

- 12,404

- 1,939

- 0

- Banned

- #3

Reading is neat.
