During WW2 there was a lot of interest in analog computing, for missiles and radar and stuff like that. People started looking at neurons, and in 1943 a neurophysiologist (Warren McCulloch) and a logician (Walter Pitts) came up with the first artificial neuron. Called the McCulloch-Pitts neuron after its inventors, it was a simple linear integrator with a threshold: it sums its inputs and fires if the sum is large enough.

McCulloch-Pitts Neuron: Mankind’s First Mathematical Model Of A Biological Neuron (towardsdatascience.com)

A lot of people still have this idea about neurons, that they sum their synaptic inputs and either fire or don't fire. And a lot can be done with that. Beginning with the Perceptron in 1958 and proceeding through the popular Hopfield model in 1982, artificial neural networks built on this idea have been very good at linear classification and estimation.

Implementing Models of Artificial Neural Network - GeeksforGeeks (www.geeksforgeeks.org)

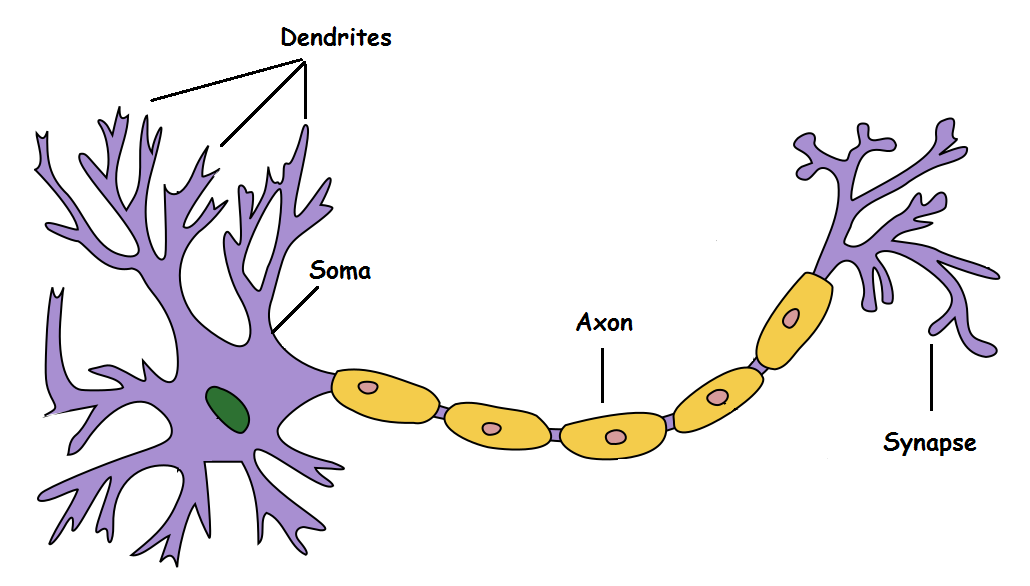
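For reference, the sum-and-threshold picture can be sketched in a few lines. This is only an illustration of the idea; the function name `threshold_neuron` and the weights and thresholds are my own choices, not taken from any of the linked articles.

```python
import numpy as np

def threshold_neuron(inputs, weights, threshold):
    """Hypothetical helper: fire (1) if the weighted input sum reaches the threshold."""
    return int(np.dot(inputs, weights) >= threshold)

# All four binary input patterns for a two-input unit.
patterns = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Same weights, different thresholds: the unit acts as AND or as OR.
and_out = [threshold_neuron(p, (1, 1), threshold=2) for p in patterns]
or_out = [threshold_neuron(p, (1, 1), threshold=1) for p in patterns]

print("AND:", and_out)
print("OR: ", or_out)
```

Everything such a unit can do comes down to where it puts a single linear boundary, which is why these networks are good at linear classification.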

Unfortunately, this is not really how a neuron works, or what it is.

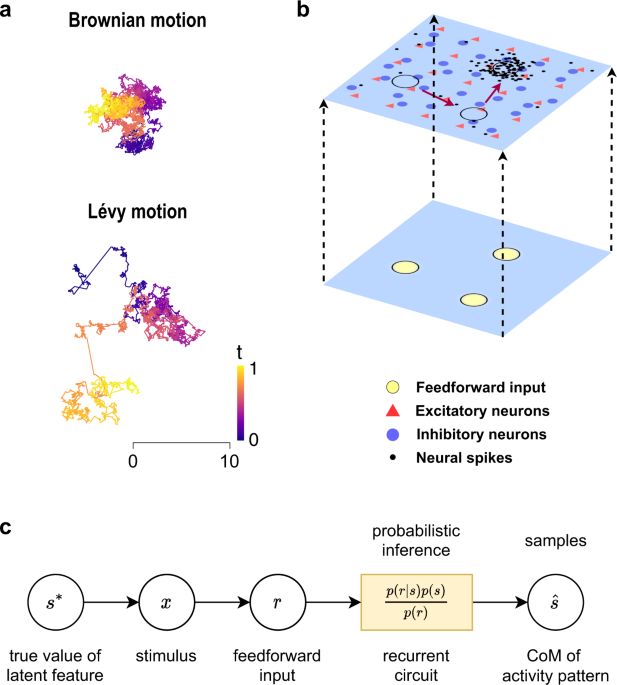

A neuron is an unstable oscillator, operating on the edge of criticality.

It works on different kinds of currents (ionic, mostly) that cross the membrane under controlled conditions. But even the well-known Hodgkin-Huxley model is far too simple.

Here is a modern analysis of what a neuron actually looks like, and how it behaves.

The authors look at "post-inhibitory rebound", which turns out to be a damped oscillation.

The paper shows how an inhibitory synapse can suddenly and magically turn into an excitatory synapse, depending on what's going on in the postsynaptic cell.
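To see how inhibition can end up exciting a cell, here is a toy sketch. It is not the model from the paper: I'm assuming a linear damped oscillator in the style of a resonate-and-fire neuron, and the parameters `b`, `omega`, the pulse timing, and the reading of the real part as "voltage" are all illustrative assumptions.

```python
import numpy as np

def simulate_rebound(b=-0.1, omega=1.0, dt=0.01, t_end=30.0,
                     pulse_start=1.0, pulse_end=2.0, pulse_amp=-1.0):
    """Euler-integrate dz/dt = (b + i*omega) * z + I(t) from rest (z = 0).

    Assumed toy model: b < 0 gives damping, omega sets the oscillation
    frequency, and Re(z) is treated as a voltage-like variable.
    """
    lam = complex(b, omega)
    t = np.arange(0.0, t_end, dt)
    v = np.empty_like(t)
    z = 0j
    for i, ti in enumerate(t):
        v[i] = z.real
        # Purely inhibitory (negative) current pulse, zero otherwise.
        current = pulse_amp if pulse_start <= ti < pulse_end else 0.0
        z += dt * (lam * z + current)
    return t, v

t, v = simulate_rebound()
trough = v.min()                  # hyperpolarization caused by the pulse
rebound_peak = v[t >= 2.0].max()  # overshoot above rest after the pulse ends

print(f"trough = {trough:.2f}, rebound peak = {rebound_peak:.2f}")
```

The only input is negative, yet the state swings above rest once the pulse ends. If a firing threshold sits below that overshoot, the "inhibitory" input produces a spike, and the rebound is smaller than the trough because the oscillation is damped.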

Synapses, it turns out, implement the first several orders of Laguerre filtering. This allows populations of neurons to model the nonlinear relationships between inputs, with what amounts to a Volterra decomposition.
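As a rough sketch of what a Laguerre-expanded Volterra model looks like in discrete time: the input goes through a small bank of Laguerre filters, and the output is a polynomial in the filter outputs. The function names, the pole value, and the coefficients below are placeholders I made up for illustration; nothing here is fitted to neural data or taken from the linked paper.

```python
import numpy as np

def laguerre_bank(x, num_filters=3, pole=0.5):
    """Filter x through a discrete Laguerre network (hypothetical helper).

    Stage 0 is a first-order low-pass; each later stage is an all-pass
    section applied to the previous stage's output.
    """
    x = np.asarray(x, dtype=float)
    v = np.zeros((num_filters, x.size))
    gain = np.sqrt(1.0 - pole ** 2)
    for n in range(x.size):
        prev = v[:, n - 1] if n > 0 else np.zeros(num_filters)
        v[0, n] = pole * prev[0] + gain * x[n]
        for j in range(1, num_filters):
            v[j, n] = pole * prev[j] + prev[j - 1] - pole * v[j - 1, n]
    return v

def volterra_output(v, c0, c1, c2):
    """Second-order Volterra model written in the Laguerre basis."""
    linear = c1 @ v                                  # sum_j c1[j] * v_j[n]
    quadratic = np.einsum("jn,jk,kn->n", v, c2, v)   # sum_jk c2[j,k] * v_j[n] * v_k[n]
    return c0 + linear + quadratic

# Impulse responses of the bank: these are the discrete Laguerre functions.
impulse = np.zeros(200)
impulse[0] = 1.0
h = laguerre_bank(impulse)
gram = h @ h.T  # close to the identity if the basis is orthonormal

# Made-up coefficients, just to show the model producing an output.
x = np.sin(0.3 * np.arange(100))
y = volterra_output(laguerre_bank(x), c0=0.0,
                    c1=np.array([1.0, 0.5, 0.0]), c2=0.1 * np.eye(3))
```

The point of the basis is economy: a handful of coefficients on a few filter outputs stands in for kernels that would otherwise need one value per pair of time lags.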

This portion of the neural population behavior is basically the "impulse response". The Volterra method works fine with fading memory (which includes things like adaptation and potentiation and other forms of plasticity), but it doesn't work as well in chaotic contexts. Coupled oscillators obey Kuramoto dynamics, which look a lot like the spatial patterns in a Belousov-Zhabotinsky reaction, or the "domains" in an Ising model, so the Volterra method can be adjusted to analyze locally around "hot spots" in the input.
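For anyone who hasn't seen Kuramoto dynamics, here is a minimal sketch of the simplest version, where every oscillator is coupled to every other. That is an assumption on my part to keep it short: global coupling shows the onset of synchrony but not the spatial domains, which need local coupling. The function name, population size, frequency spread, and coupling values are all illustrative.

```python
import numpy as np

def kuramoto_order(coupling, n=50, dt=0.01, steps=2000, seed=0):
    """Simulate globally coupled Kuramoto oscillators; return the final order parameter r.

    d(theta_i)/dt = omega_i + coupling * r * sin(psi - theta_i),
    where r * exp(i * psi) is the population mean of exp(i * theta).
    """
    rng = np.random.default_rng(seed)
    omega = rng.normal(0.0, 0.5, n)           # natural frequencies (assumed spread)
    theta = rng.uniform(0.0, 2.0 * np.pi, n)  # random initial phases
    for _ in range(steps):
        mean_field = np.exp(1j * theta).mean()
        r, psi = np.abs(mean_field), np.angle(mean_field)
        theta += dt * (omega + coupling * r * np.sin(psi - theta))
    return np.abs(np.exp(1j * theta).mean())

r_free = kuramoto_order(coupling=0.0)  # uncoupled: phases stay scattered
r_sync = kuramoto_order(coupling=4.0)  # strong coupling: phases lock together

print(f"r without coupling: {r_free:.2f}, r with strong coupling: {r_sync:.2f}")
```

The order parameter r runs from 0 (phases scattered) to 1 (fully locked), so comparing the two runs shows what the coupling buys you.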

A note on the optimal expansion of Volterra models using Laguerre functions (www.sciencedirect.com)

This perspective has forced a reconsideration of almost every aspect of modern neuroscience. For example, the "on center" and "off center" receptive fields no longer make sense: there are only bipolar cells that are either depolarized or hyperpolarized by the photoreceptors, and they are nonlinear and have differing time constants. What was once thought to be a simple retina with only 5 cell types is now known to be highly complex, with 10 layers of neural connections and more than 60 genetically identifiable cell types with specific branching patterns.

Some of the connections in the retina will cause a ganglion cell to change from an ordinary integrative mode to a "bursting" mode. Previously these were identified as inhibitory synapses, but now we know they're doing something different. Yes, they inhibit in the ordinary integrative mode. But in bursting mode, they're responsible for the timing of the inter-spike intervals.

Neurons turn out to be pretty smart. They carry several different types of codes at the same time, and they respond to the time-varying properties of the input signal. If you're interested, check out the sections called Dynamical and Functional:

www.scholarpedia.org
