Verity Jones has two nice articles on how including or excluding temperature anomalies can make a difference when constructing global data sets:

The trouble with anomalies… Part 1 | Digging in the Clay

The trouble with anomalies… Part 2 | Digging in the Clay

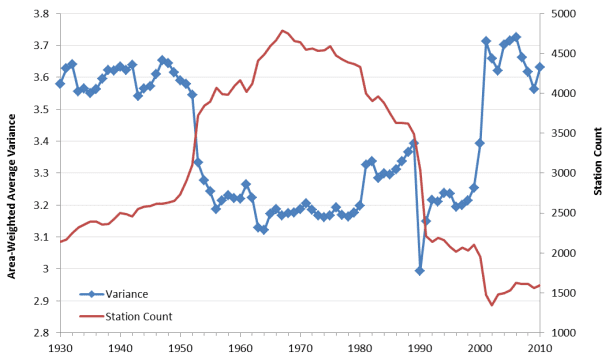

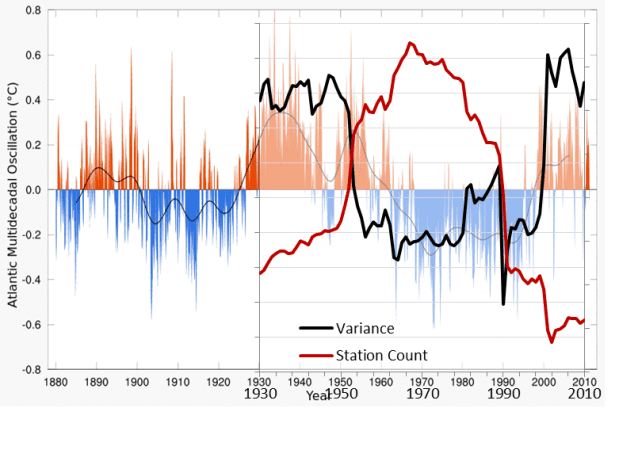

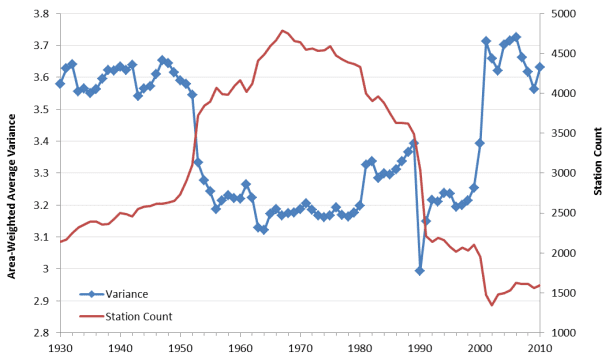

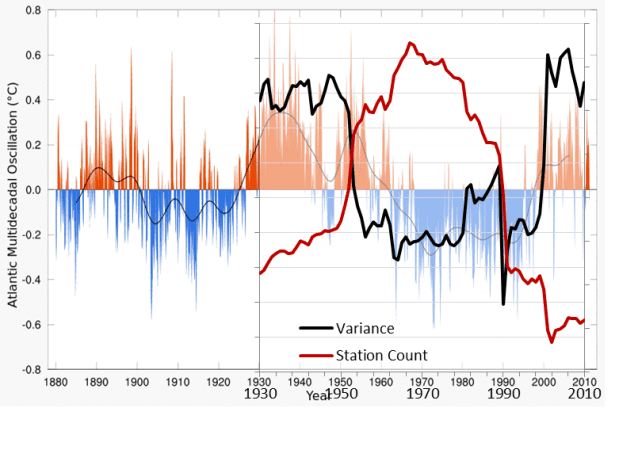

But the really funny punchline was at the end! He compared the whole thing to the AMO (Atlantic Multidecadal Oscillation) and it matched pretty well. Hahaha. The warmists say that everything is known and controlled for, but they are full of BS. Every area of climate studies looks OK superficially, but it all breaks down once you look at things more carefully or add other variables. The science is not settled, people!

I know most people don't really care to figure out how the science behind the climate wars actually works, but a whole lot of information is out there.