Windparadox

Gold Member

News and politics forums (as well as social forums) are notorious for false and misleading news. While I always like to at least double-check sources, there are a few news sites I consider highly credible and others I give little or no credibility. Checking sources is time-consuming, as the article states. While I will never fully trust a computer and its algorithms, this is a step in the right direction.

"Machine learning system aims to determine if an information outlet is accurate or biased. (Massachusetts Institute of Technology) - Lately the fact-checking world has been in a bit of a crisis. Sites like Politifact and Snopes have traditionally focused on specific claims, which is admirable but tedious; by the time they’ve gotten through verifying or debunking a fact, there’s a good chance it’s already traveled across the globe and back again.

Social media companies have also had mixed results limiting the spread of propaganda and misinformation. Facebook plans to have 20,000 human moderators by the end of the year, and is putting significant resources into developing its own fake-news-detecting algorithms.

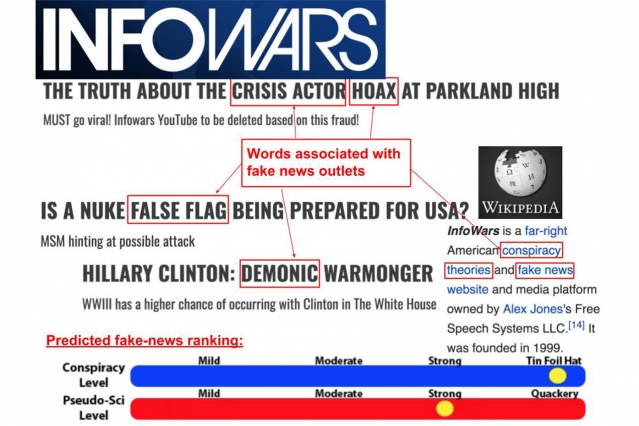

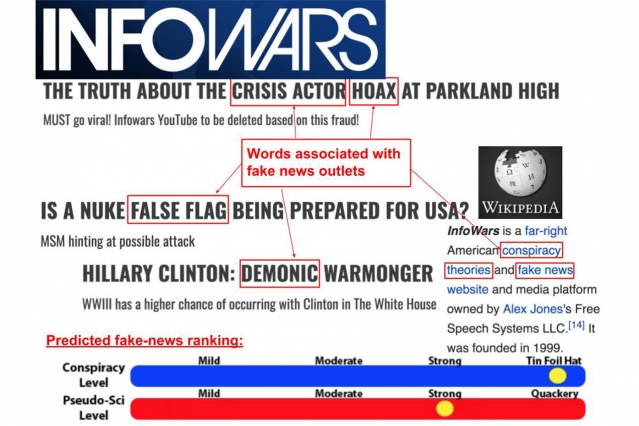

Researchers from MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) and the Qatar Computing Research Institute (QCRI) believe that the best approach is to focus not only on individual claims, but on the news sources themselves. Using this tack, they’ve demonstrated a new system that uses machine learning to determine if a source is accurate or politically biased.

“If a website has published fake news before, there’s a good chance they’ll do it again,” says postdoc Ramy Baly, the lead author on a new paper about the system. “By automatically scraping data about these sites, the hope is that our system can help figure out which ones are likely to do it in the first place.”

Baly says the system needs only about 150 articles to reliably detect if a news source can be trusted — meaning that an approach like theirs could be used to help stamp out new fake-news outlets before the stories spread too widely. - Source

